This analysis is drawn from our Executive's Guide to Code Agents. While this overview highlights key AI coding assistants available today, the complete guide offers in-depth evaluations, implementation strategies, and ROI frameworks to support your decision-making process. To download and read it, click here.

The explosion of interest in AI coding tools has led to a rich landscape of options. Broadly, these can be divided into commercial closed-source products and open-source projects/frameworks. Both categories aim to provide similar AI coding assistance, but they come with different philosophies and trade-offs. Enterprise leaders should understand these differences, as they affect everything from security to cost to flexibility. Below, we compare the two categories and highlight notable examples of each.

Closed-source AI coding assistants are typically developed by major tech companies or well-funded startups and are offered as proprietary services (often SaaS or licensed software). They tend to provide polished user experiences and integrate deeply with specific platforms or ecosystems. A few prominent examples:

GitHub Copilot, the best-known AI pair programmer, integrates into VS Code, Visual Studio, JetBrains IDEs, and other editors. It is powered by OpenAI models (originally Codex, later GPT-4-class models) trained on large amounts of public code, including code hosted on GitHub. Copilot offers real-time code suggestions and a chat assistant ("Copilot Chat"). It is sold as a per-user subscription and sends code context to the cloud for inference. Copilot is popular for its ease of use and the quality of its suggestions, but some enterprises are wary of code leaving their environment. To address these concerns, GitHub introduced Copilot Business (launched as Copilot for Business), which adds organization-level policy controls.

Amazon Q Developer is AWS's entry into AI coding assistants. It evolved from Amazon CodeWhisperer and became generally available in 2024. Q Developer integrates with JetBrains IDEs and VS Code via a plugin, and it also provides a command-line agent. It is designed to handle larger projects and multi-step tasks through built-in agents: "/dev" implements features with multi-file changes, "/doc" produces documentation and diagrams, and "/review" performs automated code review. As an AWS product, it ties in with AWS cloud services (IAM access control, AWS API access, and so on), which makes it attractive to companies already building on AWS. It is closed-source and offered as a managed service, with a free tier and a paid per-user Pro tier. AWS emphasizes its enterprise-grade security: Amazon Q can be configured not to retain code, and it operates within AWS's compliance environment.

Gemini Code Assist, Google's offering (formerly Duet AI for Developers), became generally available in 2024. It runs on Google's Gemini models, tuned for coding tasks. It offers code completion, chat, and code generation, and is integrated into Google Cloud tools (Cloud Shell, Cloud Workstations) as well as popular IDEs via plugins. One distinguishing feature is that it can cite sources for the code it suggests, which helps developers verify suggestions. Google has priced it aggressively to encourage adoption: it is free for individual developers (with high monthly limits), and enterprise tiers add admin controls. Closed-source and hosted on Google Cloud, it appeals to Google Cloud customers and to teams that trust Google's AI capabilities.

Tabnine is a widely adopted AI coding assistant that stands out for its focus on privacy and personalization. It integrates with all major IDEs, uses what Tabnine describes as ethically sourced training data, and applies zero-data-retention policies to protect code confidentiality. What makes Tabnine distinctive is its ability to learn from your codebase and team patterns, providing contextual suggestions while enforcing coding standards. It also supports switchable large language models: you can use Tabnine's proprietary models or popular third-party options. It works across 30+ programming languages and can generate anything from single-line completions to entire functions and tests. Its contextual awareness and its ability to create custom models trained on specific codebases make it particularly valuable for teams working with proprietary code.
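To make "learning from your codebase" concrete, here is a toy sketch of one generic way an assistant can pick relevant context: rank repository snippets by how many identifiers they share with the code around the cursor. This is an illustration of the general idea only, not Tabnine's method; the function names and the sample repository are hypothetical, and production tools use far more sophisticated retrieval.

```python
import re
from collections import Counter

# Toy context retrieval by identifier overlap (hypothetical; not Tabnine's implementation).
IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def identifiers(code: str) -> Counter:
    """Count identifier-like tokens in a piece of code."""
    return Counter(IDENT.findall(code))


def rank_context(cursor_code: str, snippets: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the names of the top_k snippets sharing the most identifiers with the cursor code."""
    query = identifiers(cursor_code)
    scores = {}
    for name, code in snippets.items():
        overlap = query & identifiers(code)  # multiset intersection keeps the minimum counts
        scores[name] = sum(overlap.values())
    # Highest score first; ties broken alphabetically so results are deterministic.
    ranked = sorted(scores, key=lambda n: (-scores[n], n))
    return ranked[:top_k]


repo = {
    "billing.py": "def charge(customer, amount):\n    return gateway.charge(customer.id, amount)",
    "auth.py": "def login(user, password):\n    return session.create(user)",
    "invoice.py": "def invoice(customer, amount):\n    return render(customer, amount)",
}

print(rank_context("total = charge(customer, amount)", repo))  # ['billing.py', 'invoice.py']
```

The top-ranked snippets would then be placed in the model's prompt alongside the code being edited, which is why suggestions can reflect a team's own helpers and naming conventions.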

Cognition AI's Devin is a commercial AI coding agent that aims to function as a complete software engineer. It operates in a controlled compute environment with access to a terminal, an editor, and a web browser. It takes development tasks as natural-language instructions, shows users its implementation plan, and executes code while maintaining context. Notably, it can search online resources and adapt based on feedback, though all work happens within Cognition's proprietary sandbox. In its launch announcement, Cognition reported that Devin resolved 13.86% of the SWE-bench issues it was evaluated on end-to-end, without human assistance. Devin can handle tasks ranging from quick website creation to deploying ML models, and recent versions add multi-agent coordination. It suits organizations that are comfortable with cloud-based development and value autonomy over customization. Developers who want more control over their tools and environment, or teams working with sensitive codebases, may prefer alternatives that offer more flexibility in model choice and local execution.

Cursor is a new breed of AI-augmented IDE: a code editor forked from the open-source VS Code, with AI deeply integrated. Its "agent mode" accepts a high-level goal and attempts to generate and edit files to meet it, including running code and iterating on the results, a thoroughly agentic approach. While the editor builds on open-source components, the AI service behind it is closed and relies largely on models from providers such as OpenAI and Anthropic. It is a subscription product aimed at power users who want an AI-first development environment.

Bolt.new, from StackBlitz, is a browser-based AI coding assistant for web development. It lets users "prompt, run, edit, and deploy full-stack apps" just by describing what they want. It went viral as a demo of prompt-driven coding: type "build a to-do app" and Bolt.new scaffolds it live. Although there is an open-source counterpart (bolt.diy), the hosted Bolt.new service is proprietary. It is an example of a domain-specific coding assistant, focused on web apps, offered as a service.

v0 is Vercel's AI-powered UI generator for creating React components and Tailwind CSS styling through natural-language prompts. It excels at quickly producing polished interfaces using shadcn/ui components: describe what you want and it generates the corresponding code. It is tightly integrated with Vercel's deployment infrastructure and can instantly create custom subdomains, but that integration ties you to Vercel's ecosystem and pricing model. It handles frontend tasks well but does not touch backend logic. Teams already on Vercel's stack often praise its streamlined workflow and code quality, though developers should consider whether they want their UI generation tied to a single vendor's platform.

Replit AI is a suite of coding tools integrated directly into Replit's cloud IDE. It combines an Agent, which generates entire projects from descriptions, with an Assistant, which explains code and makes incremental changes. Through natural-language interaction, it can handle everything from creating full-stack applications to fixing bugs and adding features. What sets it apart is the seamless cloud integration: there is no setup, and you can go from idea to deployment within the platform. The Agent can build complex features automatically, while the Assistant acts like a coding buddy that understands your codebase's context. Users note that context retention could be better, as it occasionally loses track of earlier conversations. The underlying models are trained on public code, and Replit tunes the system to understand project context and framework choices.

Lovable is an AI development platform that converts natural language into working web applications. Its standout feature is handling the entire development stack, from UI to backend, through a chat interface. The platform uses LLMs from Anthropic and OpenAI to translate English descriptions into functional code, and it integrates with popular services like GitHub and Supabase. With its Select & Edit feature, users can modify any element through text commands, and they can even convert Figma designs or website screenshots into working applications. While primarily focused on web development, Lovable has gained traction among entrepreneurs and product teams for rapid prototyping. The trade-off: it may struggle with complex architectures, but it excels at quickly building standard business applications without requiring deep technical expertise.

These closed-source options typically offer convenience and reliability – they often “just work” out-of-the-box with minimal setup. They come with vendor support and usually integrate nicely if you’re within that vendor’s ecosystem (Microsoft, AWS, Google, etc.). However, they have some drawbacks from an enterprise perspective: you have less transparency into how they work, limited ability to customize or self-host, and there may be concerns about data (code) leaving your controlled environment. Costs can also add up (e.g. $10-$20 per developer per month, or usage-based fees for heavy use). Vendor lock-in is a consideration: if you deeply adopt, say, Copilot with all its bells and whistles, switching later isn’t trivial.
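A quick back-of-the-envelope calculation helps when comparing flat per-seat pricing with usage-based fees. The sketch below uses the $10 to $20 per-seat range mentioned above; the team size, request volume, tokens per request, token price, and 220 workdays per year are all hypothetical inputs to replace with your own quotes and telemetry.

```python
# Back-of-the-envelope cost comparison; every number here is a hypothetical input.

def annual_seat_cost(developers: int, price_per_seat_month: float) -> float:
    """Flat per-developer subscription billed monthly."""
    return developers * price_per_seat_month * 12


def annual_usage_cost(developers: int, requests_per_dev_day: int,
                      tokens_per_request: int, usd_per_million_tokens: float,
                      workdays: int = 220) -> float:
    """Pay-as-you-go API usage, e.g. bringing your own key to an open-source tool."""
    tokens = developers * requests_per_dev_day * tokens_per_request * workdays
    return tokens / 1_000_000 * usd_per_million_tokens


for seat_price in (10, 20):
    print(seat_price, annual_seat_cost(100, seat_price))  # prints "10 12000" then "20 24000"

print(annual_usage_cost(100, 50, 2_000, 3.0))  # 6600.0
```

Agentic tools can consume many times more tokens per task than simple completions, so a tenfold increase in request volume (6,600 becomes 66,000 in this model) can flip the comparison; it is worth measuring actual usage before committing to either model.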

On the other side, the open-source community has been extremely active in the AI code assistant space. Many developers and organizations prefer open-source solutions for their flexibility, transparency, and potentially lower cost (no license fees, and the ability to run on your own hardware). Open-source AI coding assistants can often be self-hosted entirely within an enterprise's network, alleviating data privacy concerns. Here are some notable open projects and frameworks:

Cline is an open-source autonomous coding assistant for VS Code. It has dual "Plan" and "Act" modes: the agent first devises a plan (a sequence of steps to implement a request) and then executes it step by step, modifying code. Cline can read the entire project, search within files, and run terminal commands, asking for your approval along the way. Essentially, it gives you an AI "dev team" inside your editor. Early users have been impressed with its ability to create new files and coordinate changes across a codebase automatically. Cline connects to language models through an API of your choosing: you can plug in a hosted model such as OpenAI's GPT-4 or Anthropic's Claude, or a local model. It is free and extensible (written in TypeScript). The benefit is that you control the model (and its costs), and your code stays on your machine except for the context Cline sends to the model provider you configure; with a local model, nothing leaves it.
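The plan-then-act pattern can be sketched in a few lines. This is a hypothetical illustration of the pattern, not Cline's code: `plan` stands in for the model call (here a fixed stub that ignores the request), and `approve` stands in for the user's per-step confirmation. The key property is that planning has no side effects, while acting applies only the steps a human approves.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    description: str
    path: str
    new_content: str


def plan(request: str) -> list[Step]:
    """Plan mode: propose steps without touching any files.
    A real agent would ask an LLM; this hypothetical stub ignores `request`."""
    return [
        Step("Create config module", "config.py", "DEBUG = False\n"),
        Step("Import config in app", "app.py", "import config\n"),
    ]


def act(steps: list[Step], files: dict[str, str],
        approve: Callable[[Step], bool]) -> list[str]:
    """Act mode: apply each step only if the user approves it; return applied paths."""
    applied = []
    for step in steps:
        if approve(step):
            files[step.path] = step.new_content
            applied.append(step.path)
    return applied


workspace: dict[str, str] = {}  # in-memory stand-in for the project files
steps = plan("add a config module")  # nothing has been written yet
done = act(steps, workspace, approve=lambda s: s.path != "app.py")  # user rejects step 2
print(done)       # ['config.py']
print(workspace)  # {'config.py': 'DEBUG = False\n'}
```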

OpenHands (formerly OpenDevin) is an open-source AI coding assistant designed to act as a full-capability software developer. According to the project, its agents can do anything a human developer can: modify code, run commands, browse the web, call APIs, and even copy code snippets from StackOverflow. It is designed to take on tedious, repetitive tasks in project backlogs so development teams can focus on more complex and creative work. Its interface combines several components: a chat panel for interacting with the agent, a workspace for file management with VS Code integration, Jupyter notebook support for data visualization, an app viewer for testing web applications, a browser for web searches, and a terminal for command execution.

Aider is a popular open-source CLI tool for AI-assisted coding. It runs in your terminal and works with a range of LLMs: you bring your own API key for a model such as GPT-4 or Claude, or point it at a local model. What sets Aider apart is that it edits your repository directly: you add one or more files to the chat, and it can modify them or create new files based on the conversation, committing each change to git so it is easy to review or undo. For example, you can say "Refactor these two files to use dependency injection" and Aider will edit both files accordingly. Thoughtworks praised Aider for enabling multi-file changes via natural language, something many other tools (especially closed ones at the time) did not support. Because it is local and open, companies can use Aider without sending code externally, aside from model API calls, which can point to a self-hosted model. The trade-off: it is less user-friendly than IDE plugins, since developers operate it from the command line. Still, its fans call it "AI pair programming in your terminal."
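Multi-file editing of this kind typically works by having the model return targeted edits (for example, in Aider's case, search/replace blocks naming a file, the exact text to find, and its replacement) that the tool then applies. The sketch below applies simplified edits of that shape to an in-memory set of files, all-or-nothing. The `Edit` type and `apply_edits` function are our own hypothetical simplification, not Aider's parser or file format, and the sample refactor mirrors the dependency-injection example above.

```python
from dataclasses import dataclass


@dataclass
class Edit:
    path: str     # file to change
    search: str   # exact existing text to find ("" means append to or create the file)
    replace: str  # text that takes its place


def apply_edits(files: dict[str, str], edits: list[Edit]) -> dict[str, str]:
    """Apply every edit to a copy of `files`; raise if any search text is missing."""
    updated = dict(files)  # the originals stay untouched if an edit fails
    for e in edits:
        current = updated.get(e.path, "")
        if not e.search:
            updated[e.path] = current + e.replace
        elif e.search in current:
            updated[e.path] = current.replace(e.search, e.replace, 1)  # first match only
        else:
            raise ValueError(f"search text not found in {e.path}")
    return updated


files = {
    "service.py": "class Service:\n    def __init__(self):\n        self.db = Database()\n",
    "main.py": "svc = Service()\n",
}
edits = [
    Edit("service.py",
         "    def __init__(self):\n        self.db = Database()\n",
         "    def __init__(self, db):\n        self.db = db\n"),
    Edit("main.py", "svc = Service()\n", "svc = Service(Database())\n"),
]
result = apply_edits(files, edits)
print(result["main.py"])  # svc = Service(Database())
```

Requiring an exact match for the search text is a deliberate safety choice: if the model misremembers the file's contents, the edit fails loudly instead of corrupting the file.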

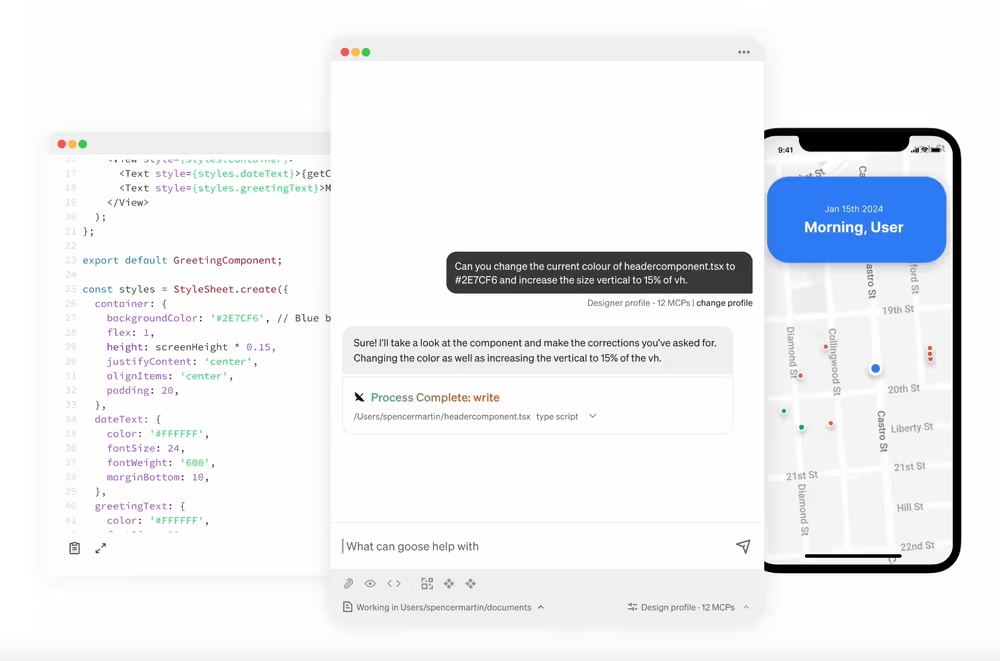

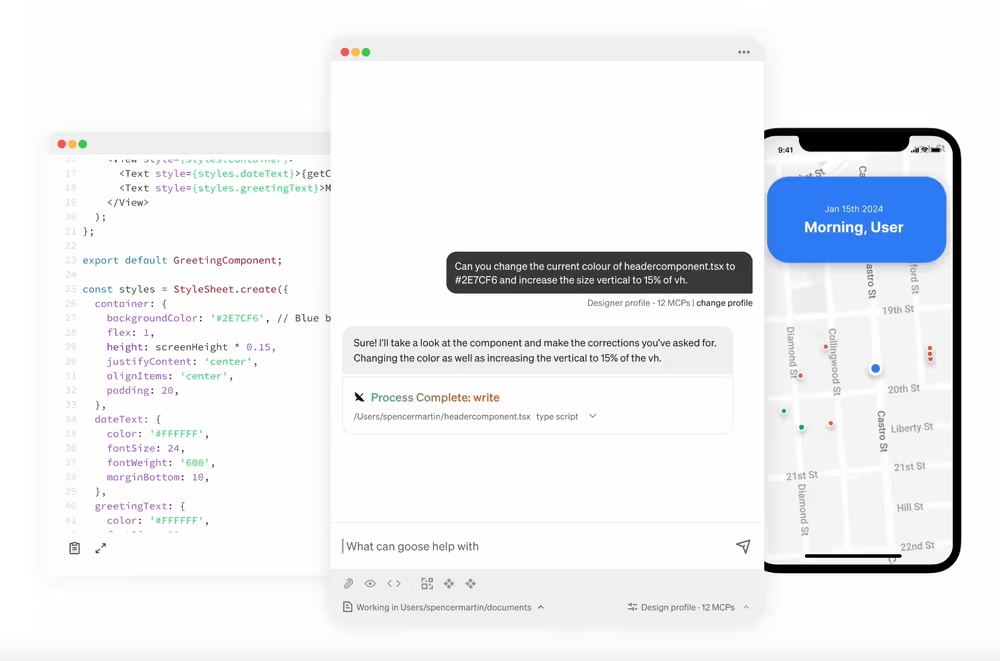

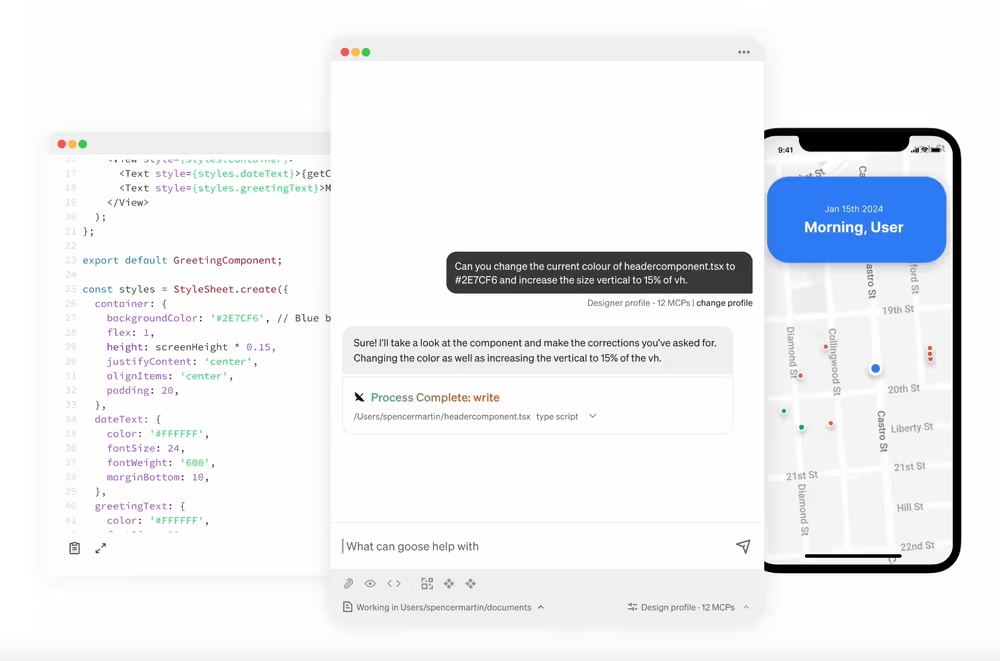

Goose, released by fintech company Block (formerly Square), is an open-source AI agent framework that can "go beyond coding." It is designed to be extensible and runs locally on your machine (entirely so when paired with a local model). Goose can write and execute code, debug errors, and interact with the file system, much like Cline or Cursor's agent mode. Because it is open-source, enterprises can extend it or integrate it with their own tools. Goose emphasizes transparency: you can see exactly what the agent is doing and which commands it runs. This is appealing if you need to enforce strict controls, since nothing is hidden in the cloud. Block open-sourced it to spur community collaboration on AI agents.
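Transparency is most useful when paired with enforcement. The sketch below shows one hypothetical guardrail an enterprise might wrap around any agent's shell tool: every proposed command is recorded in an audit log, and only commands whose program is on an allowlist may run. It is not part of Goose; the allowlist contents and function names are assumptions for illustration. Allowlisting by program name is coarse (an allowed `git` can still push), so real deployments would layer finer rules on top.

```python
import shlex
import subprocess
from datetime import datetime, timezone

# Hypothetical policy: programs the agent may invoke.
ALLOWED = {"ls", "git", "pytest"}

audit_log: list[dict] = []  # every command the agent proposed, allowed or not


def run_agent_command(command: str, execute: bool = True) -> str:
    """Log the command, block it unless allowlisted, then optionally run it without a shell."""
    argv = shlex.split(command)
    allowed = bool(argv) and argv[0] in ALLOWED
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "command": command,
        "allowed": allowed,
    })
    if not allowed:
        return "blocked"
    if not execute:
        return "approved (dry run)"
    # Passing a list (no shell=True) avoids shell injection via metacharacters.
    result = subprocess.run(argv, capture_output=True, text=True, timeout=60)
    return result.stdout


print(run_agent_command("git status", execute=False))    # approved (dry run)
print(run_agent_command("rm -rf build", execute=False))  # blocked
print([entry["allowed"] for entry in audit_log])         # [True, False]
```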

Continue is an open-source platform and IDE extension that has gained a lot of attention (20K+ GitHub stars by 2025). It allows developers to create and share custom AI assistants that live in the IDE. Think of Continue as a framework: out of the box, it provides VS Code and JetBrains plugins that offer code chat and completion using local or remote models. But it is built to be highly configurable: developers can add "blocks" (pieces such as prompts, rules, or integrations) and even create domain-specific agents. Continue's 1.0 release introduced a hub where the community and companies can share custom-built assistants and building blocks. An organization could, for example, create a specialized code assistant that knows its internal libraries or coding style and share it with all developers via Continue. Early enterprise users of Continue include companies like Siemens and Morningstar, indicating real-world viability. Being open-source, Continue can run fully inside an enterprise environment, and it works with a wide range of models, from local LLMs to cloud APIs, giving teams considerable flexibility. As the founders put it, "the 'one-size-fits-all' AI code assistant will be a thing of the past." Continue is about tailor-made AI for your team, rather than a generic model that only the provider can change.

Codeium is a distinctive AI code assistant that positions itself as an "open" alternative to proprietary solutions like GitHub Copilot. While not open-source in the traditional sense, it is free for individual developers and emphasizes privacy by not training on customer code. Developed by ex-Google engineers, Codeium offers plugins for numerous integrated development environments (IDEs) and supports over 70 programming languages. In November 2024, Codeium introduced the Windsurf Editor, an AI-powered IDE that builds advanced AI features directly into the coding workflow. For enterprises, Codeium provides self-hosted deployment options, allowing organizations to run the AI model within their own cloud infrastructure to maintain privacy.

Beyond full "assistant" frameworks, the open-source movement has produced many high-quality code-specialized models, including StarCoder, CodeGen, PolyCoder, and Meta's Code Llama. More recently, Alibaba released CodeQwen and then the Qwen2.5-Coder family, whose largest (32B) model achieved top-tier code generation performance among open models in late 2024 and can be run locally. There are also community-driven models such as WizardCoder and Phind-CodeLlama. An emerging trend is smaller models fine-tuned for specific languages or use cases, which organizations can run cheaply themselves. These models can plug into open-source agent frameworks like Continue or Aider. Models such as DeepSeek-R1-Distill-Qwen-14B (a reasoning model distilled from DeepSeek-R1 onto a Qwen2.5 14B base) hint at a future where even mid-size models (10-15B parameters) perform impressively on code tasks. Open models give enterprises the option to avoid external API calls entirely: they can deploy these models on secured machines in a VPC, fulfilling the goal of "AI behind your firewall."
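Many self-hosting runtimes expose an OpenAI-compatible HTTP API, which is one reason open models slot easily into existing tools. The sketch below assumes an Ollama server reachable inside your network (Ollama serves an OpenAI-compatible endpoint under `/v1`) and a locally pulled Qwen2.5-Coder model; the host and model tag are placeholders for your own deployment. It uses only the Python standard library, so no request ever needs to leave the VPC.

```python
import json
import urllib.request

# Assumptions: an Ollama server on this host and a pulled model tag; adjust both for your setup.
BASE_URL = "http://localhost:11434/v1"
MODEL = "qwen2.5-coder:7b"


def build_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at a self-hosted endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,  # low temperature for more deterministic code output
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str) -> str:
    """Send the request and return the first choice's text (requires the server to be running)."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Usage, once the server is up:
# print(complete("Write a Python function that reverses a string."))
```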

Each approach has pros and cons. Here’s a side-by-side look at key considerations:

In practice, many enterprises adopt a hybrid approach. For instance, a team might use GitHub Copilot for general coding but employ an open-source tool like Aider for sensitive projects that cannot leave the intranet. Another team might run an open framework like Continue against an internal model by default, routing only particularly tough problems to an external API. The key is that open-source options provide leverage: they give enterprises bargaining power and technical options beyond what any single vendor offers. An open ecosystem also tends to innovate faster in niches; for example, when a new programming language or framework arises, the community may build an AI helper for it before the big companies do.
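A hybrid setup ultimately comes down to a routing policy. Here is a minimal sketch of the rule described above, assuming a hypothetical list of sensitive repositories and two placeholder backends: sensitive code always stays on the internal model, and only non-sensitive work flagged as hard is escalated to an external API.

```python
# Hypothetical policy: repository names and backend labels are placeholders.
SENSITIVE_REPOS = {"payments-core", "customer-records"}


def choose_backend(repo: str, hard_problem: bool = False) -> str:
    """Decide where an AI coding request is allowed to go."""
    if repo in SENSITIVE_REPOS:
        return "internal-model"  # sensitive code never leaves the intranet
    if hard_problem:
        return "external-api"    # escalate tough, non-sensitive work
    return "internal-model"      # default to the self-hosted model


print(choose_backend("payments-core", hard_problem=True))   # internal-model
print(choose_backend("marketing-site", hard_problem=True))  # external-api
print(choose_backend("marketing-site"))                     # internal-model
```

Checking sensitivity before difficulty is the important design choice: no "this is hard" flag can ever push protected code to an outside provider.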

Importantly, favoring open-source is not just a philosophical stance; it often yields practical benefits in security, cost, and flexibility. As Thoughtworks noted in their Technology Radar, open tools like Aider can directly edit multiple files across a codebase – a capability many closed tools lack – and since they run locally with your own API key, you pay only for the actual usage of the AI model, not a markup. This level of control and capability can be very attractive.

For enterprise leaders, the takeaway is: you have options. If vendor lock-in or data privacy is a concern, the open route is viable and getting stronger every month. If immediate out-of-the-box productivity is paramount and you trust the vendor, the commercial products are mature and supported. Many organizations will mix and match to get the best of both worlds.

For teams looking to streamline this hybrid approach without the integration headaches, an operating-system approach such as Shakudo makes it easy to deploy and manage both open and closed tools securely in your own environment. Everything works together out of the box, from AI coding assistants to local models and vector databases, so your team can stay focused on building.

Curious how it could fit into your stack? Book a demo or join our AI Workshop—a one-day session where we’ll help you map out the fastest path from proof of concept to real business value.

.avif)

This analysis is drawn from our Executive's Guide to Code Agents. While this overview highlights key AI coding assistants available today, the complete guide offers in-depth evaluations, implementation strategies, and ROI frameworks to support your decision-making process. To download and read it, click here.

The explosion of interest in AI coding tools has led to a rich landscape of options. Broadly, these can be divided into commercial closed-source products and open-source projects/frameworks. Both categories aim to provide similar AI coding assistance, but they come with different philosophies and trade-offs. Enterprise leaders should understand these differences, as they affect everything from security to cost to flexibility. Below, we compare the two categories and highlight notable examples of each.

Closed-source AI coding assistant are typically developed by major tech companies or well-funded startups and are offered as proprietary services (often SaaS or licensed software). They tend to provide polished user experiences and integrate deeply with specific platforms or ecosystems. A few prominent examples:

The most famous AI pair-programmer, Copilot integrates into VS Code, Visual Studio, JetBrains, etc. It’s powered by OpenAI’s Codex and GPT-4 models, trained on GitHub’s massive code corpus. Copilot offers real-time code suggestions and a chat assistant (“Copilot Chat”). It’s a paid service (subscription per user) and requires sending code to Microsoft/OpenAI’s cloud for inference. Copilot is well-loved for its ease of use and quality of suggestions, but some enterprises are wary of code leaving their environment. GitHub has introduced a Copilot for Business with policy controls to address these concerns.

Announced in 2024, Amazon Q is AWS’s entry into AI coding assistants. It evolved from Amazon’s CodeWhisperer. Q Developer integrates with JetBrains IDEs and VS Code via a plugin, and uniquely also provides a CLI agent. It’s designed to handle large projects and multiple tasks: “/dev” agents that implement features with multi-file changes, “/doc” agents for documentation and diagrams, and “/review” for automated code review. Being an AWS product, it ties in with AWS cloud services (with IAM control, cloud APIs access, etc.), making it attractive to companies already building on AWS. It’s closed-source and offered as a managed service (with usage-based pricing). AWS highlights its enterprise-grade security, since Amazon Q can be configured to not retain code and works within AWS’s compliance environment.

Google’s solution, part of its broader Duet AI, became generally available in 2024. Gemini Code Assist uses Google’s cutting-edge Gemini LLM (which is optimized for code) . It offers code completion, chat, and code generation, and is integrated into Google Cloud’s tools (Cloud Shell, Cloud Workstations) as well as popular IDEs via plugins. One distinguishing feature is that it can provide citations for the code it suggests (helpful for developers to verify suggestions). Google has aggressively priced this – free for individual developers (with high monthly limits) – to encourage adoption, and offers enterprise tiers with admin controls. Closed-source and hosted on GCP, it appeals to Google Cloud customers and those who trust Google’s AI capabilities.

Tabnine is a widely-adopted AI coding assistant that stands out for its focus on privacy and personalization. It integrates with all major IDEs and uses ethically sourced training data with zero data retention policies to protect code confidentiality. What makes Tabnine unique is its ability to learn from your codebase and team patterns to provide contextual suggestions while enforcing coding standards. The tool supports switchable large language models - you can use Tabnine's proprietary models or popular third-party options. It works across 30+ programming languages and can generate everything from single-line completions to entire functions and tests. Its contextual awareness and ability to create custom models trained on specific codebases have made it particularly valuable for teams working with proprietary code.

Cognition AI's Devin is a commercial AI coding agent that aims to function as a complete software engineer, operating in a controlled compute environment with access to terminal, editor, and web capabilities. The system can tackle development tasks through natural language commands, showing users its implementation plan and executing code while maintaining context. What's notable is its ability to search online resources and adapt based on feedback - though all work happens within their proprietary sandbox environment. Early benchmarks reported impressive results, claiming 13.86% of bugs fixed autonomously. The system can handle tasks ranging from quick website creation to deploying ML models, with recent versions adding multi-agent coordination capabilities. The solution works best for organizations comfortable with cloud-based development and who value autonomous capabilities over customization. However, developers who prefer more control over their tools and development environment, or teams working with sensitive codebases, might want to explore alternatives that offer more flexibility in terms of model choice and local execution.

Cursor is a new breed of AI-augmented IDE. It’s essentially a code editor (forked from an open-source editor) with AI deeply integrated. Cursor offers an “agent mode” where you can give it a high-level goal and it will attempt to generate and edit files to meet that goal, including running code and iterating – a very agentic approach. While the editor itself might use open components, the AI service behind Cursor’s coding assistant is closed (they likely use OpenAI/Anthropic models under the hood). It’s a subscription product targeted at power users who want an AI-first development environment.

Bolt.new is an AI-powered web development coding assistant accessible via browser. It lets users “prompt, run, edit, and deploy full-stack apps” just by describing what they want. It went viral as a demo of flow-based coding with AI (one could type “build a to-do app” and Bolt.new scaffolded it live). While there is an open-source core (StackBlitz has an OSS version called bolt.diy), the hosted Bolt.new service and its specific models are proprietary. It’s an example of a domain-specific coding assistant (focused on web apps) offered as a service.

v0 is Vercel's AI-powered UI generator for creating React components and Tailwind CSS styling through natural language prompts. The tool excels at quickly producing polished interfaces using shadcn UI components – just describe what you want and it generates the corresponding code. While it's tightly integrated with Vercel's deployment infrastructure and can instantly create custom subdomains, you're locked into their ecosystem and pricing model. The tool handles frontend tasks well but doesn't touch backend logic. Teams already using Vercel's stack often praise its streamlined workflow and code quality, though developers should consider whether they want their UI generation capabilities tied to a single vendor's platform.

Replit AI is a suite of coding tools integrated directly into Replit's cloud IDE. It combines an Agent for generating entire projects from descriptions, and an Assistant for explaining code and making incremental changes. The system can handle everything from creating full-stack applications to fixing bugs and adding features through natural language interaction. What sets it apart is the seamless cloud integration - there's no setup needed, and you can go from idea to deployment within their platform. The Agent can build complex features automatically, while the Assistant acts like a coding buddy that understands your codebase's context. While powerful, users note its context retention could be improved, and it occasionally loses track of earlier conversations. The system is trained on public code and tuned by Replit to understand project context and framework choices.

Lovable is an AI development platform that converts natural language into working web applications. Its standout feature is handling the entire development stack - from UI to backend - through a chat interface. The platform leverages LLMs (from Anthropic and OpenAI) to translate English descriptions into functional code, while integrating with popular services like GitHub and Supabase. Users can modify any element through text commands with its Select & Edit feature, and even convert Figma designs or website screenshots into working applications. While primarily focused on web development, Lovable has gained traction among entrepreneurs and product teams for rapid prototyping. The trade-off: it may have limitations with complex architectures, but excels at quickly building standard business applications without requiring deep technical expertise.

These closed-source options typically offer convenience and reliability – they often “just work” out-of-the-box with minimal setup. They come with vendor support and usually integrate nicely if you’re within that vendor’s ecosystem (Microsoft, AWS, Google, etc.). However, they have some drawbacks from an enterprise perspective: you have less transparency into how they work, limited ability to customize or self-host, and there may be concerns about data (code) leaving your controlled environment. Costs can also add up (e.g. $10-$20 per developer per month, or usage-based fees for heavy use). Vendor lock-in is a consideration: if you deeply adopt, say, Copilot with all its bells and whistles, switching later isn’t trivial.

On the other side, the open-source community has been incredibly active in the AI code assistant space. Many developers and organizations prefer open-source solutions for the flexibility, transparency, and potentially lower cost (no license fees, ability to run on your own hardware). Open-source AI coding assistant can often be self-hosted entirely within an enterprise’s network, alleviating data privacy concerns. Here are some notable open projects and frameworks:

Cline is an open-source autonomous coding assistant for VS Code. It has dual “Plan” and “Act” modes – the agent can first devise a plan (a sequence of steps to implement a request) and then execute them one by one, modifying code. Cline can read the entire project, search within files, and perform terminal commands. Essentially, it gives you an AI “dev team” inside your editor. Early users have been impressed with its ability to create new files and coordinate changes across a codebase automatically. Cline connects to language models via a specified API – you can plug in OpenAI GPT-4, or a local model of your choice. It’s free and extensible (written in TypeScript/Node). The benefit here is you control the model (and costs), and your code stays local while Cline works with it.

OpenHands is an open-source AI coding assistant that acts as a full-capability software developer. It can perform any task a human developer would do - from modifying code and running commands to browsing the web, calling APIs, and even sourcing code snippets from StackOverflow. The platform is designed to tackle tedious and repetitive tasks in project backlogs, allowing development teams to focus on more complex and creative challenges. OpenHands features a comprehensive interface with multiple components: a chat panel for user interaction, a workspace for file management with VS Code integration, Jupyter notebook support for data visualization, an app viewer for testing web applications, a browser for web searches, and a terminal for command execution.

Aider is a popular open-source CLI tool for AI-assisted coding. It runs in your terminal and pairs with GPT models (you bring your API key for an LLM like GPT-4). What sets Aider apart is that it has write access to your repository – you give it one or multiple files, and it can modify them or even create new files based on a conversation. For example, you can say “Refactor these two files to use dependency injection” and Aider will edit both files accordingly. Thoughtworks praised Aider for enabling multi-file changes via natural language, something many other tools (especially closed ones at the time) didn’t support. Because it’s local and open, companies can use Aider without sending code externally (aside from model API calls, which can point to a self-hosted model). The trade-off: it’s a bit less user-friendly than IDE plugins – developers need to operate it via command line. Still, its fans call it “AI pair programming in your terminal.”

Goose, released by fintech company Block (formerly Square), is an open-source AI agent framework that can “go beyond coding”. It’s designed to be extensible and run entirely locally. Goose can write and execute code, debug errors, and interact with the file system – much like Cline or Cursor’s agent mode. Since it’s open-source (in Python, under the hood), enterprises can extend it or integrate it with their own tools. Goose emphasizes transparency: you can see exactly what the agent is doing, which commands it runs, etc. This is appealing if you need to enforce strict controls – nothing hidden in the cloud. Block open-sourced it to spur community collaboration on AI agents.

Continue is an open-source platform and IDE extension that has gained a lot of attention (20K+ GitHub stars by 2025). It allows developers to create and share custom AI assistants that live in the IDE. Think of Continue as a framework: out of the box, it provides VS Code and JetBrains plugins that can do code chat and completion using local or remote models. But it’s built to be highly configurable: developers can add “blocks” (pieces like prompts, rules, or integrations) and even create domain-specific agents. Continue’s recent 1.0 release introduced a hub where the community and companies can share their custom-built assistants and building blocks. This means an organization could, for example, create a specialized code assistant that knows about its internal libraries or coding style and share it with all of its developers via Continue. Notably, early enterprise users of Continue include companies like Siemens and Morningstar, indicating real-world viability. Being open source, Continue can run fully within an enterprise environment, and it supports any model, from local LLMs to cloud APIs, giving tremendous flexibility. As the founders put it, “the ‘one-size-fits-all’ AI code assistant will be a thing of the past.” Continue is about tailor-made AI for your team, rather than relying on a generic model only the provider can change.
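
The “blocks” idea is essentially composition: an assistant is a model plus reusable rules and prompts, and swapping one block does not disturb the others. Continue's real configuration is a file format, not Python, so the sketch below only models the composition idea; every name in it (`ModelBlock`, `RuleBlock`, `PromptBlock`, `compose`, the `acme_http` library, the endpoint URL) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass(frozen=True)
class ModelBlock:
    name: str
    endpoint: str  # a local server or a cloud API; the assistant treats both the same


@dataclass(frozen=True)
class RuleBlock:
    text: str  # a team convention the model should follow


@dataclass(frozen=True)
class PromptBlock:
    name: str
    template: str  # "{code}" marks where the user's code goes


Block = Union[ModelBlock, RuleBlock, PromptBlock]


@dataclass
class Assistant:
    model: ModelBlock
    rules: List[str] = field(default_factory=list)
    prompts: Dict[str, str] = field(default_factory=dict)

    def render(self, prompt_name: str, code: str) -> str:
        """Build the text sent to the model: team rules first, then the task."""
        rules = "\n".join(f"- {rule}" for rule in self.rules)
        task = self.prompts[prompt_name].format(code=code)
        return f"Follow these rules:\n{rules}\n\n{task}"


def compose(blocks: List[Block]) -> Assistant:
    """Assemble an assistant from reusable blocks; exactly one model block is required."""
    models = [b for b in blocks if isinstance(b, ModelBlock)]
    if len(models) != 1:
        raise ValueError("expected exactly one model block")
    return Assistant(
        model=models[0],
        rules=[b.text for b in blocks if isinstance(b, RuleBlock)],
        prompts={b.name: b.template for b in blocks if isinstance(b, PromptBlock)},
    )


# Usage: a team assistant that knows an internal library and runs on an internal model.
team_assistant = compose([
    ModelBlock("internal-coder", "http://localhost:8000/v1"),
    RuleBlock("Use the internal acme_http client, never requests."),
    PromptBlock("review", "Review this code:\n{code}"),
])
prompt = team_assistant.render("review", "print('hi')")
```

Because the model is just one block, moving the same team rules from a local model to a cloud API (or back) means replacing a single `ModelBlock`.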

Codeium is an AI code assistant that positions itself as an "open" alternative to proprietary solutions like GitHub Copilot. Although it is not open source in the traditional sense, it is free for individual developers and emphasizes privacy by not training on customer code. Developed by ex-Google engineers, Codeium offers plugins for numerous IDEs and supports over 70 programming languages. In November 2024, Codeium introduced the Windsurf Editor, an AI-powered IDE that builds its AI features directly into the coding workflow. For enterprises, Codeium offers self-hosted deployment options, allowing organizations to run the AI model within their own cloud infrastructure to maintain privacy.

In addition to full “assistant” frameworks, the open-source movement has produced many high-quality code-specialized models. Examples include StarCoder, CodeGen, PolyCoder, and Meta’s Code Llama. More recently, Alibaba’s Qwen team released a series of code models (CodeQwen1.5, then Qwen2.5-Coder in sizes up to 32B parameters), which in late 2024 reached top-tier code generation performance among open models and can be run locally. There are also community-driven models like WizardCoder and Phind CodeLlama. An emerging trend is smaller models fine-tuned for specific languages or use cases, which organizations can run cheaply themselves. These models can plug into open-source agent frameworks like Continue or Aider. Models such as DeepSeek-R1-Distill-Qwen-14B (a 14B-parameter model distilled from DeepSeek’s R1 reasoning model onto a Qwen2.5 base) hint at a future where even mid-size models (10-15B parameters) perform impressively on code tasks. Open model availability gives enterprises an option to avoid external API calls entirely: they can deploy these models on secured machines in a VPC, fulfilling the dream of “AI behind your firewall.”
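
In practice, “plugging in” a self-hosted model usually means pointing a tool at an OpenAI-compatible HTTP endpoint; local model servers such as Ollama and vLLM expose one, and tools like Aider and Continue can be configured to use a custom base URL. A minimal stdlib-only sketch, assuming an Ollama server on its default port with a `qwen2.5-coder:14b` model already pulled (both are assumptions about your setup):

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST to an OpenAI-compatible /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first completion's text (needs a running server)."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Assumed setup: Ollama on localhost:11434 serving a Qwen2.5-Coder model.
req = build_chat_request(
    "http://localhost:11434/v1",
    "qwen2.5-coder:14b",
    "Write a Python function that reverses a string.",
)
# send(req) only succeeds against a live server; the traffic never leaves your network.
```

Because the request shape is the same one cloud APIs accept, switching between a VPC-hosted model and an external provider is mostly a matter of changing `base_url` and the model name.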

Each approach has pros and cons. Here’s a side-by-side look at key considerations:

In practice, many enterprises adopt a hybrid approach. For instance, a team might use GitHub Copilot for general coding but employ an open-source tool like Aider, paired with a self-hosted model, for sensitive projects whose code cannot leave the intranet. Another team might run an open framework like Continue against an internal model by default and route only particularly tough problems to an external API. The key is that open-source options provide leverage: they give enterprises bargaining power and technical options beyond what any single vendor offers. An open ecosystem also tends to innovate faster in niches; for example, when a new programming language or framework emerges, the community may build an AI helper for it before the big companies do.
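
The Continue-style pattern described above, an internal model by default with occasional escalation to an external API, comes down to a routing rule. A minimal sketch with hypothetical repository and model names; the key design choice is that the sensitivity check runs first, so flagging a task as hard can never send restricted code outside.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    repo: str
    prompt: str
    hard: bool = False  # e.g., flagged by the developer or after a failed local attempt


# Hypothetical policy inputs: repos whose code must never leave the network.
SENSITIVE_REPOS = {"payments-core", "customer-data"}
LOCAL_MODEL = "internal-coder"      # served inside the VPC
EXTERNAL_MODEL = "vendor-frontier"  # cloud API, used sparingly


def route(task: Task) -> str:
    """Sensitive code always stays local; hard, non-sensitive tasks may go external."""
    if task.repo in SENSITIVE_REPOS:
        return LOCAL_MODEL
    if task.hard:
        return EXTERNAL_MODEL
    return LOCAL_MODEL


decisions = [
    route(Task("payments-core", "fix rounding bug", hard=True)),
    route(Task("marketing-site", "add a footer")),
    route(Task("marketing-site", "optimize the build graph", hard=True)),
]
```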

Importantly, favoring open source is not just a philosophical stance; it often yields practical benefits in security, cost, and flexibility. As Thoughtworks noted in its Technology Radar, open tools like Aider can directly edit multiple files across a codebase (a capability many closed tools lacked at the time), and because they run locally with your own API key, you pay only for your actual usage of the AI model, not a markup. This level of control and capability can be very attractive.
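
Whether paying only for usage actually saves money is easy to sanity-check with a little arithmetic: monthly usage cost is tokens consumed times the per-token price, compared against a flat per-seat fee. The prices and token volumes below are placeholders for illustration, not any vendor's real rates; substitute your own measured usage and quotes.

```python
def monthly_usage_cost(input_tokens: int, output_tokens: int,
                       usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Pay-as-you-go cost: tokens used times the price per million tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


def cheaper_option(seat_price_usd: float, usage_cost_usd: float) -> str:
    """Compare one developer's monthly usage cost against a flat per-seat fee."""
    return "usage-based" if usage_cost_usd < seat_price_usd else "per-seat"


# Placeholder prices and volumes for illustration only.
SEAT_PRICE = 20.0
light = monthly_usage_cost(2_000_000, 200_000, usd_per_m_input=2.0, usd_per_m_output=10.0)
heavy = monthly_usage_cost(20_000_000, 2_000_000, usd_per_m_input=2.0, usd_per_m_output=10.0)
light_choice = cheaper_option(SEAT_PRICE, light)
heavy_choice = cheaper_option(SEAT_PRICE, heavy)
```

With these placeholder numbers the light user costs 6 USD a month and the heavy user 60 USD, so the comparison cuts both ways: usage-based pricing favors light users, while very heavy users can exceed a flat seat price. Self-hosting a model also adds infrastructure cost, which this sketch leaves out.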

For enterprise leaders, the takeaway is simple: you have options. If vendor lock-in or data privacy is a concern, the open route is viable and getting stronger every month. If immediate, out-of-the-box productivity is paramount and you trust the vendor, the commercial products are mature and supported. Many organizations will mix and match to get the best of both worlds.

For teams looking to streamline this hybrid approach without the integration headaches, an operating-system model like Shakudo makes it easy to deploy and manage both open and closed tools securely in your own environment. Everything works together out of the box, from AI coding assistants to local models and vector databases, so your team can stay focused on building.

Curious how it could fit into your stack? Book a demo or join our AI Workshop—a one-day session where we’ll help you map out the fastest path from proof of concept to real business value.
