This article introduces five of the most notable open-source LLMs as of June 2023: Falcon, MPT, FastChat-T5, OpenLLaMA, and RedPajama-INCITE. Each stands out in some combination of architecture, computational efficiency, range of use cases, and performance. For conversational, summarization, and other text-related tasks, FastChat-T5 (3 billion parameters) is the most cost-effective choice: it runs on an Nvidia T4 GPU and can be fine-tuned for downstream tasks. For more advanced applications such as code generation, reading comprehension, and problem-solving, we recommend the 7-billion-parameter versions of Falcon and MPT, each of which runs on a single Nvidia A10G GPU (24 GB). If you need more capability, Falcon-40B and MPT-30B are available. At 16-bit precision, MPT-30B needs an Nvidia A100 GPU with 80 GB of memory, while Falcon-40B does not fit on a single A100 at all.

Language Models (LMs) estimate the probability distribution of words (tokens) in text. Their primary goal is to predict the next token given a sequence of preceding tokens. For example, given the phrase "Hinton is a great _", an LM might assign next-token probabilities such as "human" (0.95), "scientist" (0.03), and "brain" (0.01), among others.
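To make this concrete, here is a minimal Python sketch of the final step of next-token prediction. It turns a model's raw scores (logits) into a probability distribution with softmax, then picks a token greedily. The logit values are invented for illustration. A real LM scores every token in its vocabulary, not just three.

```python
import math

def softmax(logits):
    """Turn raw scores (logits) into a probability distribution over tokens."""
    # Subtracting the max logit keeps exp() numerically stable without changing the result.
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical logits a model might produce after "Hinton is a great".
logits = {"human": 5.0, "scientist": 1.6, "brain": 0.5}
probs = softmax(logits)
next_token = max(probs, key=probs.get)  # greedy decoding: take the most likely token
```

Sampling strategies such as top-k simply draw from `probs` instead of always taking the maximum.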

Large Language Models (LLMs) are neural-network-based language models with over 1 billion parameters. By learning from vast quantities of text, they can produce human-like language and perform various downstream tasks such as Question Answering, Summarization, Translation, and more.

LLMs commonly use the transformer architecture (Vaswani et al., 2017), which is built around self-attention. Self-attention processes all positions of a sequence at once rather than step by step, so transformers parallelize well on hardware such as GPUs and TPUs. To develop a robust understanding of language, these models are pretrained on a large corpus of text. Once pretrained, they can be fine-tuned on smaller datasets for specific downstream tasks.
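The core of self-attention is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The pure-Python sketch below computes it for a single head. As a simplifying assumption, it uses the input vectors directly as queries, keys, and values. Real transformers first apply learned projection matrices and run many heads in parallel. Every output row comes from the same matrix products, which is why the computation parallelizes so easily.

```python
import math

def matmul(a, b):
    """Multiply matrices stored as lists of rows: (n x d) @ (d x m) -> (n x m)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def softmax_rows(m):
    """Apply a numerically stable softmax to each row."""
    result = []
    for row in m:
        top = max(row)
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        result.append([e / total for e in exps])
    return result

def self_attention(q, k, v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(q[0])
    k_t = [list(col) for col in zip(*k)]        # transpose K: (d_k x n)
    scores = matmul(q, k_t)                      # (n x n) query-key similarities
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scaled)               # each row sums to 1
    return matmul(weights, v), weights           # weighted sums of value vectors

# Three toy 2-dimensional token vectors (hypothetical values), used as Q, K and V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(x, x, x)
```

Row `i` of `weights` shows how strongly token `i` attends to each token in the sequence.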

Encoder-only models are trained with bidirectional context: each token's representation draws on the tokens both before and after it, which helps the model learn strong representations of language. A model that can see the whole sequence could simply read off the next token, so these models are instead pretrained with the Masked Language Modeling (MLM) task. In MLM, a portion of the input tokens is masked and the model must predict the masked tokens. The resulting text representations can be fine-tuned for downstream tasks such as Classification, Question Answering, and Named Entity Recognition. Well-known encoder models that use this approach include BERT, RoBERTa, ALBERT, and DeBERTa.
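The masking step of MLM can be sketched as follows. This version is simplified. BERT selects about 15% of tokens and replaces most, but not all, of them with `[MASK]`: some become random tokens and some stay unchanged. This sketch masks every selected token. The `[MASK]` string and the function name are illustrative.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Simplified MLM corruption: replace a random subset of tokens with [MASK].

    Returns (inputs, labels). labels holds the original token at masked
    positions and None elsewhere, because the loss is computed only on
    masked positions.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(len(tokens) * mask_prob))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    inputs = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    labels = [tok if i in positions else None for i, tok in enumerate(tokens)]
    return inputs, labels

tokens = "the model predicts the masked tokens from both sides of the context".split()
inputs, labels = mask_tokens(tokens)
```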

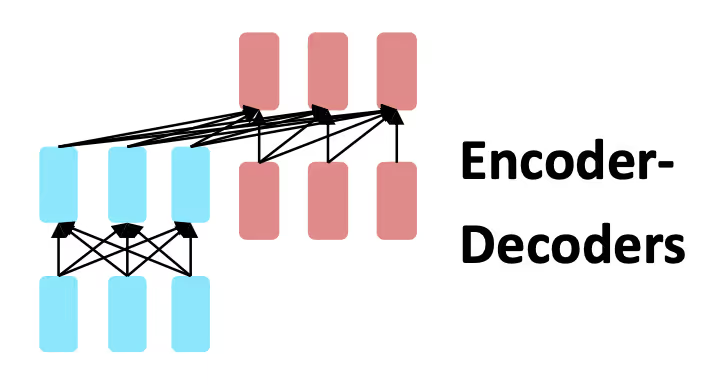

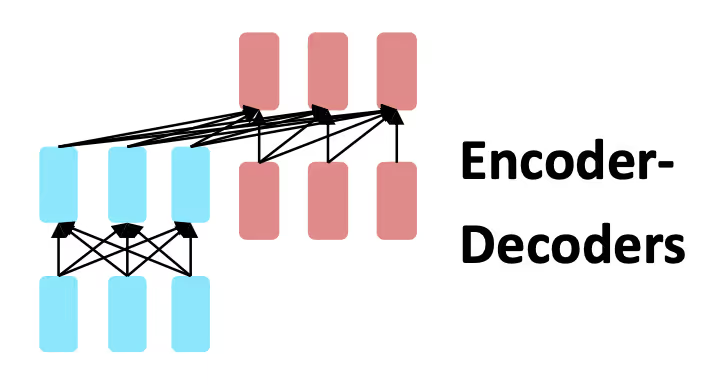

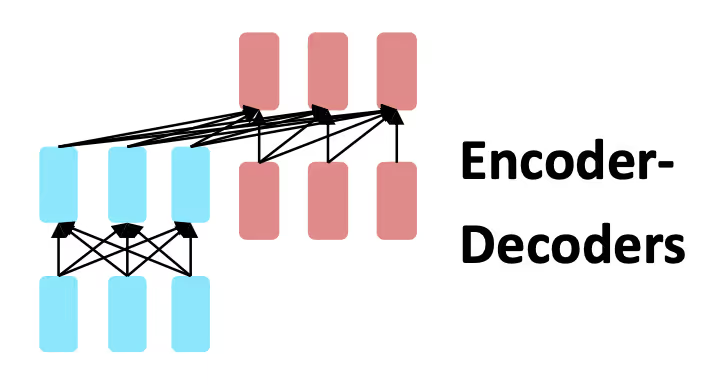

In encoder-decoder models, the input (a prefix) is fed to the encoder, which uses bidirectional context, and the decoder predicts the output. Studies have found that corrupting spans of text and training the decoder to predict those spans works particularly well as a pretraining objective (Raffel et al. 2019; Tay et al. 2022). The T5 paper (Raffel et al. 2019) pretrains models with span corruption and then fine-tunes them on 12 different downstream tasks, including question answering. The UL2 paper (Tay et al. 2022) proposes specific ways of denoising and choosing spans that unify different language-learning objectives.
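Span corruption can be sketched in a few lines. The example reproduces the sentence used in the T5 paper, with sentinel tokens named `<extra_id_N>` as in the Hugging Face T5 tokenizer. The spans here are chosen by hand for readability. In actual T5 pretraining, spans are sampled randomly: about 15% of tokens, with an average span length of 3.

```python
def span_corrupt(tokens, spans):
    """T5-style span corruption.

    spans is a sorted list of non-overlapping (start, end) index pairs, end exclusive.
    Each span is replaced in the input by a unique sentinel. The target lists each
    sentinel followed by the tokens it replaced, and ends with one final sentinel.
    """
    inputs, targets = [], []
    cursor = 0
    for i, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])   # keep uncorrupted text
        inputs.append(sentinel)               # stand-in for the dropped span
        targets.append(sentinel)
        targets.extend(tokens[start:end])     # decoder must reproduce the span
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")
    return inputs, targets

tokens = "Thank you for inviting me to your party last week".split()
inputs, targets = span_corrupt(tokens, [(2, 4), (8, 9)])
# inputs:  Thank you <extra_id_0> me to your party <extra_id_1> week
# targets: <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```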

Decoder-only models are the most common type of language model. They are trained with a causal language modeling objective: at every position in the sequence, the model predicts the next token using only the tokens before it. They are commonly used for text generation. They can also be fine-tuned for downstream tasks by adding a classifier on top of the last token's hidden representation. OpenAI's GPT-1, GPT-2, and GPT-3 are examples of decoder models.
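The causal objective has two parts. The labels are the input shifted left by one token, and a lower-triangular mask stops each position from attending to later ones. The sketch below shows both. The token IDs are arbitrary placeholders.

```python
def causal_lm_pairs(token_ids):
    """Inputs and next-token labels for causal LM: position t is trained to predict token t+1."""
    return token_ids[:-1], token_ids[1:]

def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

ids = [5, 8, 2, 9]                 # hypothetical token IDs
inputs, labels = causal_lm_pairs(ids)
mask = causal_mask(len(inputs))
```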

You can learn more about pretraining language models from the CS224N lecture, the T5 paper (Raffel et al. 2019), and the UL2 paper (Tay et al. 2022).

The figure from Yang et al. (2023) shows the evolutionary tree of LLMs over the years. It illustrates how encoder-only architectures have largely fallen out of favor and how quickly the field is evolving.

Open-source LLMs are flexible: users can modify and tailor them to their specific needs, boosting performance on their own datasets.

Open-source LLMs also improve data privacy. Because you host the model yourself, you keep complete control over your data, which reduces the risk of data breaches.

Real-time user interaction applications can greatly benefit from the lower latencies offered by efficiently optimized and deployed open-source LLMs.

These topics are discussed in more detail in our blog post “Building a PDF Knowledge Bot With Open-Source LLMs - A Step-by-Step Guide”.

Hugging Face's Open LLM Leaderboard compares text-generation LLMs across several benchmarks.

In this section, we share the essentials you need to deploy any of the top five LLMs licensed for commercial use in your business operations: the Falcon series, the MPT series, FastChat-T5, OpenLLaMA, and RedPajama-INCITE.

* GPU cost calculations are based on fullstackdeeplearning.com estimates.

Falcon is a decoder-only LLM developed by the Technology Innovation Institute (TII) in Abu Dhabi. As of June 2023, it ranked first on Hugging Face's Open LLM Leaderboard. The Falcon series consists of two models, Falcon-40B and Falcon-7B.

What sets Falcon apart is its training data. TII built a dedicated data pipeline with specialized tools that extracts high-quality content from web data through filtering and deduplication, producing the RefinedWeb dataset. Falcon also uses multi-query attention, which shares keys and values across attention heads. This shrinks the key-value cache kept during generation and makes inference more scalable.
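A rough way to see why multi-query attention helps inference is to compare key-value cache sizes. The sketch below assumes fp16/bf16 storage and a hypothetical 7B-scale configuration: 32 layers, 71 heads of dimension 64, and a 2,048-token context. These numbers are illustrative, not a quoted Falcon specification. With a single shared key/value head, the cache shrinks by a factor equal to the number of heads.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_value=2):
    """Bytes needed to cache keys and values during autoregressive generation.

    The leading 2 counts one key tensor and one value tensor per layer;
    bytes_per_value=2 assumes fp16/bf16 storage.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value

# Hypothetical 7B-scale configuration, for illustration only.
layers, heads, head_dim, seq_len = 32, 71, 64, 2048
mha = kv_cache_bytes(layers, heads, head_dim, seq_len)  # multi-head: every head caches its own K and V
mqa = kv_cache_bytes(layers, 1, head_dim, seq_len)      # multi-query: all heads share one K/V head
```

For these inputs, standard multi-head attention needs about 1.19 GB of cache per sequence, compared with about 16.8 MB for multi-query attention. That leaves more memory for larger batches or longer contexts.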

The Falcon models were trained in May 2023 and are fully open-source under the Apache License 2.0.

Falcon-40B was trained on 1 trillion tokens (Falcon-7B on 1.5 trillion). It stands out for its efficiency, using only about 75% of GPT-3's training compute budget, although its training still required 384 GPUs on AWS for over two months. The two sizes, 40 billion and 7 billion parameters, give you flexibility depending on your available hardware.

Falcon can be used for applications such as generating human-like text, answering questions, and translating languages. The Hugging Face model page shows how to run it for inference. We also used Falcon-7B in a previous Shakudo tutorial on PDF QA with open-source models.
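Below is a minimal sketch of running Falcon-7B-Instruct through a Hugging Face `text-generation` pipeline, modeled on the usage shown on the model page. It assumes that `torch`, `transformers`, and `accelerate` (needed for `device_map="auto"`) are installed and that a GPU with enough memory is available. The function name is our own. The imports sit inside the function, so the module loads even where those packages are missing.

```python
def build_falcon_generator(model_id="tiiuae/falcon-7b-instruct"):
    """Return a Hugging Face text-generation pipeline for a Falcon checkpoint."""
    # Deferred imports: the module loads even where torch/transformers are absent.
    import torch
    from transformers import pipeline

    return pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,  # 16-bit weights: roughly 14 GB for 7B parameters
        trust_remote_code=True,      # early Falcon checkpoints shipped custom modeling code
        device_map="auto",           # place layers on available GPUs (needs accelerate)
    )

# Example usage:
# generator = build_falcon_generator()
# result = generator("Explain multi-query attention in one sentence.",
#                    max_new_tokens=60, do_sample=True, top_k=10)
# print(result[0]["generated_text"])
```

Building the pipeline once and reusing it avoids reloading several gigabytes of weights on every request.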

For inference, Falcon-7B fits on a GPU with 15-20 GB of memory (one Nvidia A10G). Falcon-40B does not fit on a single 80 GB A100 at 16-bit precision, but an 8-bit version fits on a GPU with about 45 GB of memory.
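These memory figures follow from simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The sketch below ignores activations, the KV cache, and framework overhead, and it uses nominal parameter counts. Treat the results as lower bounds.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores activations, the KV cache, and framework overhead, so real
    requirements are somewhat higher.
    """
    return n_params * bits_per_param / 8 / 1e9

# Nominal parameter counts; actual checkpoints differ slightly.
for name, n_params in [("Falcon-7B", 7e9), ("Falcon-40B", 40e9)]:
    for bits in (16, 8):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(n_params, bits):.0f} GB")
```

This gives about 14 GB for Falcon-7B at 16-bit and 80 GB for Falcon-40B. At 80 GB, the 16-bit Falcon-40B weights alone fill an 80 GB A100 and leave no room for activations. The 8-bit weights (about 40 GB) fit within roughly 45 GB.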

Falcon illustrates a shift in the LLM field. Despite the general trend toward ever-larger models, Falcon shows that a strategic focus on high-quality training data and an efficient architecture can improve performance while substantially reducing computational demands. This approach is likely to shape future LLM development, particularly as computational capacity becomes a growing constraint.

You can learn more about Falcon from the RefinedWeb paper and the Hugging Face blog post.

The MPT series consists of decoder-only LLMs developed by MosaicML. The models were trained on a diverse dataset of 1 trillion tokens spanning natural language text, code, and scientific text, which makes them versatile. Instead of positional embeddings, MPT uses ALiBi (Attention with Linear Biases), which lets the models handle longer contexts at inference than they saw during training. The models are open-source under the Apache License 2.0 and CC BY-SA 3.0.
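ALiBi replaces positional embeddings with a fixed, head-specific penalty proportional to the distance between a query and a key. The sketch below follows the ALiBi paper's recipe for a power-of-two number of heads, with slopes 2^(-8h/n). It is illustrative, not MosaicML's implementation. Because the bias depends only on relative distance, it can be computed for any sequence length. As a convenience, the sketch folds the causal mask into the bias by using -inf for future positions.

```python
def alibi_slopes(n_heads):
    """Per-head slopes for a power-of-two head count: 2^(-8h/n) for h = 1..n."""
    return [2 ** (-8 * h / n_heads) for h in range(1, n_heads + 1)]

def alibi_bias(seq_len, slope):
    """Bias added to attention scores: -slope * (i - j) for key j at or before query i.

    Future positions (j > i) get -inf, which doubles as the causal mask.
    """
    return [[-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
            for i in range(seq_len)]

slopes = alibi_slopes(8)          # one slope per head; steeper slopes focus on nearby tokens
bias = alibi_bias(4, slopes[0])   # 4x4 bias matrix for the first head
```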

MPT-30B was trained with an 8k context length on 256 H100 GPUs, making it the first publicly known LLM trained on NVIDIA H100s. MPT-7B, released a month earlier, required substantial infrastructure: the base model was trained on 440 A100-40GB GPUs for about 9.5 days, at a cost of around $200k. The fine-tuned versions, MPT-7B-Instruct and MPT-7B-Chat, were far less resource-intensive, costing between a few hundred and a few thousand dollars each.
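As a back-of-the-envelope check on the MPT-7B figures, 440 GPUs for 9.5 days comes to about 100,320 GPU-hours. At a $200k total, that implies roughly $2 per A100-hour. The hourly rate is derived from the numbers above, not a quoted price.

```python
def gpu_hours(n_gpus, days):
    """Total GPU-hours for n_gpus running continuously for the given number of days."""
    return n_gpus * days * 24

hours = gpu_hours(440, 9.5)        # MPT-7B base pretraining figures from the text
implied_rate = 200_000 / hours     # implied dollars per A100-hour at ~$200k total
print(f"{hours:,.0f} GPU-hours, ~${implied_rate:.2f} per GPU-hour")
```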

Beyond the base models, MPT comes in two fine-tuned variants, MPT-Instruct and MPT-Chat. MPT-Instruct is task-oriented, which makes it useful for applications that require instruction-following or question-answering, such as Q&A systems or instructional guides. MPT-Chat is designed for fluent conversation, which makes it a good fit for chatbots, virtual assistants, and other interactive tools.

For inference, MPT-30B fits on one 80 GB A100 GPU at 16-bit precision, or on one 40 GB A100 at 8-bit precision. MPT-7B fits on a GPU with 15-20 GB of memory (one Nvidia A10G). The Hugging Face model pages show how to use MPT for inference.
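The sketch below loads MPT with a longer context window, following the pattern in MosaicML's model cards: set `config.max_seq_len` before loading. It assumes `torch`, `transformers`, and `accelerate` are installed, plus `bitsandbytes` for the 8-bit path. It uses the EleutherAI/gpt-neox-20b tokenizer that MPT was trained with. The function name, the `device_map="auto"` placement, and the use of `BitsAndBytesConfig` for 8-bit loading are our own choices. Verify them against the transformers version you use.

```python
def load_mpt(name="mosaicml/mpt-7b", max_seq_len=4096, load_in_8bit=False,
             tokenizer_name="EleutherAI/gpt-neox-20b"):
    """Load an MPT model with an extended context window (hypothetical helper)."""
    # Deferred imports so the module loads without torch/transformers installed.
    import torch
    import transformers

    config = transformers.AutoConfig.from_pretrained(name, trust_remote_code=True)
    # ALiBi has no learned position table, so the usable context can be raised at load time.
    config.max_seq_len = max_seq_len

    kwargs = {"config": config, "trust_remote_code": True, "device_map": "auto"}
    if load_in_8bit:
        # 8-bit weights, e.g. MPT-30B on a 40 GB A100; needs bitsandbytes.
        kwargs["quantization_config"] = transformers.BitsAndBytesConfig(load_in_8bit=True)
    else:
        # 16-bit weights, e.g. MPT-30B on an 80 GB A100.
        kwargs["torch_dtype"] = torch.bfloat16
    model = transformers.AutoModelForCausalLM.from_pretrained(name, **kwargs)
    tokenizer = transformers.AutoTokenizer.from_pretrained(tokenizer_name)
    return model, tokenizer

# Example usage:
# model, tokenizer = load_mpt("mosaicml/mpt-30b", max_seq_len=8192, load_in_8bit=True)
```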

MPT's optimized layers, including FlashAttention and low-precision layer norm, deliver out-of-the-box performance 1.5x-2x faster than other comparable 7B models. This makes inference pipelines faster and more efficient in real-world applications. For maximum performance, MPT weights can also be ported directly to FasterTransformer or ONNX.

You can learn more about MPT from the MPT-30B and MPT-7B blog posts.

FastChat-T5 is a chatbot model that the FastChat team built by fine-tuning Flan-T5-XL, a 3-billion-parameter transformer. Its primary function is to generate responses to user inputs autoregressively, and its underlying architecture is an encoder-decoder transformer. It was trained in April 2023 and is fully open-source under the Apache License 2.0.

FastChat-T5 was trained on approximately 70,000 user-shared conversations from sharegpt.com. The ShareGPT data is cast into a question-answering format: each ChatGPT response is treated as an answer, and the preceding conversation between the user and ChatGPT is treated as the question. The encoder encodes each question bidirectionally into a hidden representation. The decoder then uses cross-attention over that representation while generating the answer unidirectionally, starting from a start token.
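This question-answering recasting can be sketched as a small data-preparation function. It assumes conversations in the ShareGPT JSON layout: a list of turns with `from` and `value` keys, where `gpt` marks ChatGPT's replies. The role prefixes and newline separator are hypothetical choices, not necessarily what the FastChat team used.

```python
def sharegpt_to_qa(conversation, sep="\n"):
    """Turn one ShareGPT-style conversation into (question, answer) pairs.

    Every assistant ("gpt") turn becomes an answer; all turns before it,
    prefixed with their role and joined by sep, become the question.
    """
    pairs, history = [], []
    for turn in conversation:
        if turn["from"] == "gpt":
            pairs.append((sep.join(history), turn["value"]))
        history.append(f"{turn['from']}: {turn['value']}")
    return pairs

conv = [
    {"from": "human", "value": "What is ALiBi?"},
    {"from": "gpt", "value": "A positional bias for attention."},
    {"from": "human", "value": "Why use it?"},
    {"from": "gpt", "value": "It extrapolates to longer inputs."},
]
pairs = sharegpt_to_qa(conv)
```

The second pair's question contains the whole preceding exchange. This gives the encoder the full conversation context for each answer.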

FastChat-T5 is intended primarily for commercial applications of LLMs and chatbots. It suits applications that need solid language understanding and generation, such as customer support systems, interactive platforms, and virtual assistants. It can also serve researchers and practitioners in natural language processing, machine learning, and artificial intelligence who are studying how to build high-quality conversational AI systems.

For inference, FastChat-T5 fits on a GPU with 15 GB of memory.

FastChat-T5 can be loaded with Hugging Face pipelines using the text2text-generation task, as shown in a previous Shakudo tutorial on PDF QA with open-source models. FastChat-T5 is also part of FastChat, an open platform for training, serving, and evaluating LLM-based chatbots.
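Here is a minimal sketch of loading FastChat-T5 through the `text2text-generation` pipeline. The model ID `lmsys/fastchat-t5-3b-v1.0` is the published Hugging Face checkpoint. The function name and default arguments are ours. It assumes `transformers` and `torch` are installed.

```python
def build_fastchat_t5(model_id="lmsys/fastchat-t5-3b-v1.0", device=0):
    """Create a text2text-generation pipeline for FastChat-T5 (device=0: first GPU, -1: CPU)."""
    from transformers import pipeline  # deferred so the module imports without transformers

    return pipeline("text2text-generation", model=model_id, device=device)

# Example usage:
# chat = build_fastchat_t5()
# reply = chat("What are the benefits of open-source LLMs?", max_new_tokens=128)
# print(reply[0]["generated_text"])
```

The encoder-decoder design is why the task is `text2text-generation` rather than `text-generation`. The pipeline feeds the prompt to the encoder and returns only the decoder's output.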

OpenLLaMA is an open-source reproduction of Meta AI's LLaMA model, developed by Berkeley AI Research. The project provides permissively licensed models with 3B, 7B, and 13B parameters, trained on 1 trillion tokens. The models use the same transformer architecture as LLaMA. They are trained on the RedPajama dataset, a reproduction of the LLaMA training dataset. The models were trained in May 2023 and are fully open-source under the Apache License 2.0.

OpenLLaMA models were trained on Cloud TPU v4 chips using EasyLM, a JAX-based pipeline for training and fine-tuning LLMs. Training combines standard data parallelism with fully sharded data parallelism to balance throughput and memory usage. The 7B model reaches a throughput of over 2,200 tokens per second per TPU v4 chip.
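This is a back-of-the-envelope estimate of what 2,200 tokens per second per chip (the 7B model's figure) means for a 1-trillion-token run. The 512-chip slice is a hypothetical value chosen for illustration. The estimate assumes constant throughput, with no restarts or evaluation overhead.

```python
SECONDS_PER_DAY = 86_400

def training_days(total_tokens, tokens_per_sec_per_chip, n_chips):
    """Wall-clock days to process total_tokens at constant per-chip throughput."""
    return total_tokens / (tokens_per_sec_per_chip * n_chips) / SECONDS_PER_DAY

chip_hours = 1e12 / 2200 / 3600                 # TPU v4 chip-hours for 1T tokens
days_on_512 = training_days(1e12, 2200, 512)    # hypothetical 512-chip slice
```

This works out to roughly 126,000 TPU v4 chip-hours, or about 10.3 days on 512 chips.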

OpenLLaMA models have been evaluated on a range of tasks with lm-evaluation-harness. They perform comparably to the original LLaMA and GPT-J on most tasks and outperform them on some. However, the tokenizer is configured to merge consecutive spaces into one. This makes the models currently unsuitable for code generation, where whitespace carries meaning. The developers plan to open-source long-context models trained on more code data.

For inference, OpenLLaMA 7B fits on a GPU with 15-20 GB of memory (one Nvidia A10G).

OpenLLaMA models can serve as drop-in replacements for LLaMA in existing implementations. Weights are provided in both PyTorch and JAX formats and can be loaded with the Hugging Face transformers library or with the EasyLM framework. The OpenLLaMA project is under active development, with regular updates and improvements.
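Because OpenLLaMA is a drop-in replacement for LLaMA, it loads with the standard LLaMA classes in transformers. The sketch follows the project's README pattern. It uses the slow `LlamaTokenizer`, because the README advises against the auto-converted fast tokenizer, and a simple `Q: ... A:` prompt. It assumes `torch`, `transformers`, `sentencepiece`, and `accelerate` are installed. The function names are ours.

```python
def build_open_llama(model_path="openlm-research/open_llama_7b"):
    """Load OpenLLaMA with the stock LLaMA classes (drop-in replacement)."""
    # Deferred imports so the module loads without torch/transformers installed.
    import torch
    from transformers import LlamaForCausalLM, LlamaTokenizer

    tokenizer = LlamaTokenizer.from_pretrained(model_path)  # slow SentencePiece tokenizer
    model = LlamaForCausalLM.from_pretrained(
        model_path, torch_dtype=torch.float16, device_map="auto"
    )
    return model, tokenizer

def open_llama_answer(model, tokenizer, question, max_new_tokens=32):
    """Generate a short answer using a plain Q/A prompt."""
    prompt = f"Q: {question}\nA:"
    input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(model.device)
    output = model.generate(input_ids=input_ids, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output[0], skip_special_tokens=True)

# Example usage:
# model, tokenizer = build_open_llama()
# print(open_llama_answer(model, tokenizer, "What is the largest animal?"))
```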

RedPajama-INCITE-7B-Base is a 6.9B-parameter pretrained decoder-only transformer developed by Together Computer in collaboration with several institutions. It was trained on 1 trillion tokens from the RedPajama dataset. Besides the base model, there are two specialized versions: RedPajama-INCITE-7B-Instruct for instruction following and RedPajama-INCITE-7B-Chat for chat applications. The models were trained in May 2023 and are fully open-source under the Apache License 2.0.

RedPajama-INCITE-7B-Base was trained as part of the INCITE 2023 project on Scalable Foundation Models for Transferable Generalist AI. Training used 3,072 V100 GPUs: 512 nodes with 6 V100s each (IBM Power9) on the OLCF Summit cluster. The training data came from togethercomputer/RedPajama-Data-1T, and the optimizer was Apex FusedAdam.

RedPajama-INCITE-7B-Base is a general-purpose language model. It is suited to tasks such as enhancing human-computer interaction, generating coherent content, and providing natural language understanding in applications. However, like any other LLM, it may not perform adequately in safety-critical applications or in decisions with substantial social impact.

The model runs on a GPU with 16 GB of memory (base inference) or 12 GB (int8 inference), or even on a CPU. The Hugging Face model page shows how to load the models for inference in Python. It requires transformers version 4.25.1 or later. Int8 inference reduces memory use while keeping accuracy close to that of full-precision inference. For CPU inference, the model card loads the model in bfloat16, because the CPU LayerNorm kernel (LayerNormKernelImpl) is not implemented for fp16. Together, these options let the model run on a wide range of hardware.
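The three inference options map onto three loading configurations. This sketch is modeled on the model card's GPU, int8, and CPU examples, but the function and mode names are hypothetical. The int8 path uses `BitsAndBytesConfig`, which requires a newer transformers release than 4.25.1 as well as `bitsandbytes` and `accelerate`. The mode is validated before the heavy imports, so a typo fails fast.

```python
SUPPORTED_MODES = ("fp16", "int8", "cpu")

def load_redpajama(mode="fp16", model_id="togethercomputer/RedPajama-INCITE-7B-Base"):
    """Load RedPajama-INCITE in one of three (hypothetically named) inference modes."""
    if mode not in SUPPORTED_MODES:
        raise ValueError(f"mode must be one of {SUPPORTED_MODES}, got {mode!r}")

    # Heavy imports are deferred until a valid mode is requested.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    if mode == "fp16":
        # Half precision on a single GPU (~16 GB).
        model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda:0")
    elif mode == "int8":
        # 8-bit weights (~12 GB); requires bitsandbytes and accelerate.
        model = AutoModelForCausalLM.from_pretrained(
            model_id,
            device_map="auto",
            quantization_config=BitsAndBytesConfig(load_in_8bit=True),
        )
    else:
        # CPU: bfloat16, because the CPU LayerNorm kernel is not implemented for fp16.
        model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.bfloat16)
    return model, tokenizer

# Example usage:
# model, tokenizer = load_redpajama("int8")
# inputs = tokenizer("Alan Turing is", return_tensors="pt").to(model.device)
# out = model.generate(**inputs, max_new_tokens=32, do_sample=True)
# print(tokenizer.decode(out[0], skip_special_tokens=True))
```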

To learn more about RedPajama-INCITE, see together.xyz's blog and the corresponding Hugging Face model page.

Our analysis of these models points to a growing trend toward interoperability and ease of integration, most visibly in their compatibility with widely used AI ecosystems such as Hugging Face. At the same time, each model has its own architecture, compute requirements, and target applications, reflecting the diversity of current AI development.

For those interested in practical applications of these advanced models, explore our blog post “Building a PDF Knowledge Bot With Open-Source LLMs - A Step-by-Step Guide” which provides hands-on experience in implementing these models. The Shakudo platform can help you get your AI products to production easily, quickly and securely. For a first-hand experience of our platform, we encourage you to contact our team and Book a demo.

This article introduces five most distinguished LLMs that have made a considerable impact as of June 2023 - Falcon, MPT, FastChat-T5, OpenLLaMA, and RedPajama-INCITE. Each of these models has demonstrated significant technical prowess in terms of architecture, computational efficiency, versatility of use cases, and marked improvements in performance. For conversational, summarization, and text-related tasks, FastChat-T5, with 3 billion parameters, is the most cost-effective. It runs on an Nvidia T4 GPU and can be finetuned for downstream tasks. For advanced applications like code generation, reading comprehension, and problem-solving, Falcon and MPT with 7 billion parameters are recommended. They are hosted on an Nvidia A10G GPU with 20GB RAM. If more power is required, Falcon-40B and MPT-30B can be used, with MPT-30B requiring an Nvidia A100 GPU with 80GB RAM. Note that Falcon-40B doesn't fit in a single A100 GPU.

Language Models (LMs) are designed to estimate the distribution of words (tokens) in a text. Their primary goal is to predict the next token in a given sequence of tokens. For example, given the phrase "Hinton is a great _", an LM might predict the next token probabilities "human" (0.95), "scientist" (0.03), or "brain" (0.01), among others.

Large Language Models (LLMs) are neural network-based language models with over 1 billion parameters. By learning from vast quantities of text data, these models can mimic human behavior and perform various downstream tasks such as Question Answering, Summarization, Translation, and more.

LLMs commonly utilize the transformer architecture (Vaswani et. al, 2017), which introduced the concept of self-attention, making it suitable for parallelizable compute resources like GPUs and TPUs. To achieve a robust understanding of language, these models require pretraining on a large corpus of data. Once pretrained, LLMs can be fine-tuned on smaller datasets for specific downstream tasks.

Trained using bidirectional context, these models aim to establish strong representations of language. Since the model has a bidirectional context, they are pre-trained using the Masked Language Modeling (MLM) task, in which a portion of input tokens are masked, and the model is tasked with predicting the masked tokens. Text representations can be finetuned and used for downstream tasks like Classification, Question Answering, Named Entity Recognition etc. Some well-known encoder models that utilize this approach include BERT, RoBERTa, ALBERT, and DeBERTa.

In these models, a prefix is given as input to the encoder, and the decoder predicts the output. The input utilizes bidirectional context. Studies have shown that corrupting spans of text and tasking the decoder with predicting the spans yields the best results (Rafael et al. 2019, and YiTay et al.2022). The T5 paper (Rafael et al. 2019) demonstrates models pretrained using span corruption and fine-tuned on various tasks, such as question answering, using 12 different fine-tuning tasks. The UL2 Paper (YiTay et al.2022) shows specific ways of denoising and choosing the span that unifies language learning.

These are the most common types of Language Models. They have a Causal LM objective to predict the full sequence length. These are commonly used for text-generation tasks. They can also be fine-tuned for downstream tasks by adding a classifier over the last token's hidden representation. OpenAI's GPT-1, GPT-2, and GPT-3 are examples of decoder models.

You can learn more about Pretraining Language Models from the CS224N lecture, T5 Paper (Raffel et al. 2019) and the UL2 paper (YiTay et al.2022)

Figure from (Yang et al. 2023) shows the evolution tree of LLMs over the years, We can see how encoder only architectures are deprecated and how quickly the field is evolving.

Open-source LLMs are flexible, allowing users to modify and tailor the models to their specific needs, boosting performance on unique data sets.

Users can modify and tailor the flexible structure of open-source LLMs to fit their specific needs, enhancing the performance on unique data sets.

Better data privacy is assured as open-source LLMs enable complete control over data, reducing the risk of data breaches.

Real-time user interaction applications can greatly benefit from the lower latencies offered by efficiently optimized and deployed open-source LLMs.

These topics are discussed in more detail in our blog post “Building a PDF Knowledge Bot With Open-Source LLMs - A Step-by-Step Guide”.

OpenLLM Leaderboard compares text-generative LLMs on different benchmarks.

In this section, we'll share all the essentials you'll need for the smooth and successful implementation of any of the top five commercially licensed LLMs in your business operations: Falcon-Series, MPT-Series, FastChat-T5, OpenLLaMA, and RedPajama.

* GPU Costs calculation based on fullstackdeeplearning.com estimates

Falcon LLM is a decoder-only large language model (LLM) developed by Abu Dhabi's Technology Innovation Institute (TII) and currently ranks first in the Hugging Face’s Open LLM LeaderBoard as of June 2023. Falcon Series consists of two models, Falcon-40B and Falcon-7B.

What sets Falcon apart is its training data. It has a unique data pipeline, developed with specialized tools, that extracts high-quality content with deduplication and filtering from web data, resulting in the RefinedWeb dataset. Falcon also uses multi-query attention, sharing the key and value pairs across the attention heads. This improves the scalability of inference.

Falcon model was trained in May 2023 and is fully open-source under Apache License 2.0.

The Falcon-40B is trained on 1.5 trillion tokens. It is a standout performer, using only about 75% of GPT-3's training compute budget. It required 384 GPUs on AWS and over two months of training. The model is offered in two versions: one with 40 billion parameters and a lighter one with 7 billion parameters, offering flexibility depending on available hardware capacity.

Falcon LLM can be readily used for various applications such as generating human-like text, answering questions, and translating languages. The hugging face model page shows how to use it for inference. We have also shown the usage of Falcon-7B in a previous Shakudo tutorial on PDF QA with Open Source Models.

For inference, Falcon 7B can be fit into a GPU with RAM of 15-20 GB (1 Nvidia A10G). Falcon 40B doesn’t fit in a single A100 with 80GB RAM. However, an 8-bit model of Falcon 40B can fit into a GPU of 45 GB RAM.

Falcon LLM underscores the paradigm shift in the LLM field. Despite the general trend towards increasingly larger models, Falcon proves that a strategic focus on high-quality training data and optimal architecture can enhance performance and significantly reduce computational demands. This shift, as demonstrated by Falcon, is likely to inform the direction of future LLM advancements, particularly in light of growing computational capacity challenges.

You can learn more about Falcon from the RefinedWeb paper and Huggingface blog.

MPT series are decoder-only large language models developed by MosaicML. The models have been trained on a diverse dataset of 1 trillion tokens, covering natural language text, code, and scientific text to ensure versatility in its applications. MPT models support larger contexts during inference with the help of ALiBi in place of positional embeddings. They are fully open-source under Apache License 2.0 and CC BY-SA-3.0.

MPT-30B is trained with an 8k context length on 256xH100s, and it is the first publicly known LLM trained on NVIDIA H100 GPUs. MPT-7B was released one month before MPT-30B and required a substantial computational infrastructure for its training. The 7B base model was trained on a setup involving 440xA100-40GB GPUs, taking approximately 9.5 days and costing around $200k. However, the subsequent fine-tuned versions of the model, specifically MPT-7B-Instruct and MPT-7B-Chat, were significantly less resource-intensive, costing between a few hundred to a few thousand dollars each.

MPT models come in two distinctive versions - MPT-Instruct and MPT-Chat. MPT-Instruct is designed to be a task-oriented model, making it highly useful for applications that require instruction-following or question-answering, such as Q&A systems or instructional guides. On the other hand, MPT-Chat aims to provide a seamless conversational experience, making it a great fit for applications like chatbots, virtual assistants, or any other interactive user engagement tools.

For inference, 16 Bit MPT-30B can be fit into one A100 GPU with RAM of 80 GB. 8 Bit MPT-30B can be fit into one A100 GPU with RAM of 40 GB. MPT-7B can be fit into a GPU with RAM of 15-20 GB (1 Nvidia A10G). The hugging face model pages show how to use MPT for inference.

The model's optimized layers, including the FlashAttention and low-precision layer norm, offer an out-of-the-box performance that is 1.5x-2x faster than other comparable 7B models. This results in faster and more efficient inference pipelines, enhancing its usability in real-world applications. Moreover, the MPT weights can be directly ported to FasterTransformer or ONNX for those seeking optimal performance.

You can learn more about MPT from the MPT-30B blog and MPT-7B blog

FastChat-T5 is a chatbot model developed by the FastChat team through fine-tuning the Flan-T5-XL model, a large transformer model with 3 billion parameters. The model's primary function is to generate responses to user inputs autoregressively. The underpinning architecture for FastChat-T5 is an encoder-decoder transformer model. It was trained in April 2023 and is fully open-source under Apache License 2.0.

FastChat-T5 was trained using approximately 70,000 user-shared conversations from sharegpt.com. The model interprets the ShareGPT data in a question-answering format, where each response from ChatGPT is treated as an answer, and previous conversations between the user and ChatGPT are processed as a question. FastChat-T5's encoder bi-directionally encodes a question into a hidden representation, and the decoder uses cross-attention to focus on this representation while generating an answer unidirectionally from a start token.

FastChat-T5's primary purpose is for commercial applications of large language models and chatbots. Due to its advanced features and capabilities, it is particularly suited for applications that demand sophisticated language understanding and generation, such as customer support systems, interactive platforms, virtual assistants, and more. It can also serve as a valuable resource for researchers and practitioners in natural language processing, machine learning, and artificial intelligence, offering insights into developing and performing high-quality conversational AI systems.

For inference, the FastChat-T5 model can be fit into a GPU of 15GB RAM.

FastChat-T5 can be loaded with hugging face pipelines using the text2text-generation task. It was shown in a previous Shakudo tutorial on PDF QA with Open Source Models. FastChat-T5 is also part of the FastChat open platform for training, serving, and evaluating large language model-based chatbots.

OpenLLaMA is an open-source reproduction of Meta AI's LLaMA large language model developed by Berkeley AI Research. The project provides permissively licensed models with 3B, 7B, and 13B parameters, trained on 1 trillion tokens. The models are based on the transformer architecture with various improvements and trained on the RedPajama dataset, a reproduction of the LLaMA training dataset. The model was trained in May 2023 and is fully open-source under Apache License 2.0.

OpenLLaMA models are trained on cloud TPU-v4s using EasyLM, a JAX-based training pipeline developed for training and fine-tuning large language models. The training process combines normal and fully sharded data parallelism to balance the training throughput and memory usage. The 7B model achieves a throughput of over 2200 tokens per second per TPU-v4 chip.

OpenLLaMA models have been evaluated on various tasks using the lm-evaluation-harness. The models perform comparably to the original LLaMA and GPT-J across most tasks and outperform them in some tasks. However, due to the tokenizer's configuration, the models are currently unsuitable for code generation tasks involving many empty spaces. The developers plan to open source long context models trained on more code data.

For inference, OpenLLaMA 7B can be fit into a GPU with RAM of 15-20 GB (1 Nvidia A10G).

OpenLLaMA models can be integrated with existing implementations as drop-in replacements for LLaMA. The models are available in PyTorch and JAX weights, which can be loaded using the Hugging Face transformers library or the EasyLM framework. The models have been evaluated against the original LLaMA models and show comparable performance across various tasks. The developers have also provided a smaller 3B variant of the LLaMA model. The OpenLLaMA project is under active development, with regular updates and improvements being released.

The RedPajama-INCITE-7B-Base is a 6.9B parameter pre-trained language model developed by Together Computer in collaboration with several institutions and is based on the Decoder Transformer architecture. This model was trained on the RedPajama dataset with 1 trillion tokens used. Apart from the base version, there are two specialized versions for instruction tuning (RedPajama-INCITE-7B-Instruct) and chat applications (RedPajama-INCITE-7B-Chat), ensuring a versatile performance across diverse applications. The model was trained in May 2023 and is fully open-source under Apache License 2.0.

Training for RedPajama-INCITE-7B-Base was conducted as part of the INCITE 2023 project on Scalable Foundation Models for Transferable Generalist AI, leveraging a robust infrastructure that included 3,072 V100 GPUs. The data used for training was extracted from togethercomputer/RedPajama-Data-1T. The computational setup involved 512 nodes of 6xV100 (IBM Power9) on the OLCF Summit cluster, using the Apex FusedAdam optimizer.

The potential use cases for RedPajama-INCITE-7B-Base are vast, mainly within the domain of language modeling. Whether it's used for enhancing human-computer interactions, generating meaningful and coherent content, or offering natural language understanding for various applications, this model is equipped to handle it. However, it is worth noting that while it is designed to be versatile, it might not perform adequately in safety-critical applications or decisions with substantial social impact, like any other LLM.

The model can run on a GPU with 16GB memory (base inference), 12GB memory (int8 inference), or even on a CPU. The hugging face model page shows how to load the models for inference. Integration of RedPajama-INCITE-7B-Base into applications is straightforward with the provided Python instructions. However, it requires the transformers library of version 4.25.1 or higher. The model has also been optimized to support int8 operations, which can offer significant speedups while maintaining similar levels of accuracy as FP32 operations. It also comes with a procedure for CPU inference, using bfloat16 for LayerNormKernelImpl as fp16 is not implemented for CPU. These architectural improvements underscore the model's commitment to achieving better performance without compromising efficiency.

To learn more about RedPajama-Incite, check out together.xyz’s blog and corresponding hugging face model page.

Taken together, these models reflect a growing emphasis on interoperability and ease of integration: all of them, for example, are available through widely used AI ecosystems such as Hugging Face. At the same time, each has its own architecture, compute requirements, and range of suitable applications, which reflects how diverse the field has become.

For a hands-on walkthrough of implementing these models, see our blog post “Building a PDF Knowledge Bot With Open-Source LLMs - A Step-by-Step Guide”. The Shakudo platform can help you get your AI products to production easily, quickly, and securely. To try the platform first-hand, contact our team to book a demo.
