If we had to describe the evolution of AI today in one word, it would probably be "explosive." According to a Market Research Future report, the large language model (LLM) market in North America alone is expected to reach $105.5 billion by 2030. The rapid growth of AI tools, combined with access to massive troves of text data, has opened the gates to more advanced content generation than we had ever hoped for. Yet such rapid expansion also makes it harder than ever to navigate the many LLMs available and select the right one.

The goal of this post is to keep you, the AI enthusiast and professional, up to date with current trends and essential innovations in the field. Below, we highlight the top 9 LLMs that we think are currently making waves in the industry. Each has distinct capabilities and specialized strengths in areas such as natural language processing, code synthesis, few-shot learning, or scalability. While we believe there is no one-size-fits-all LLM, we hope this list helps you identify the model that best meets your business's unique requirements.

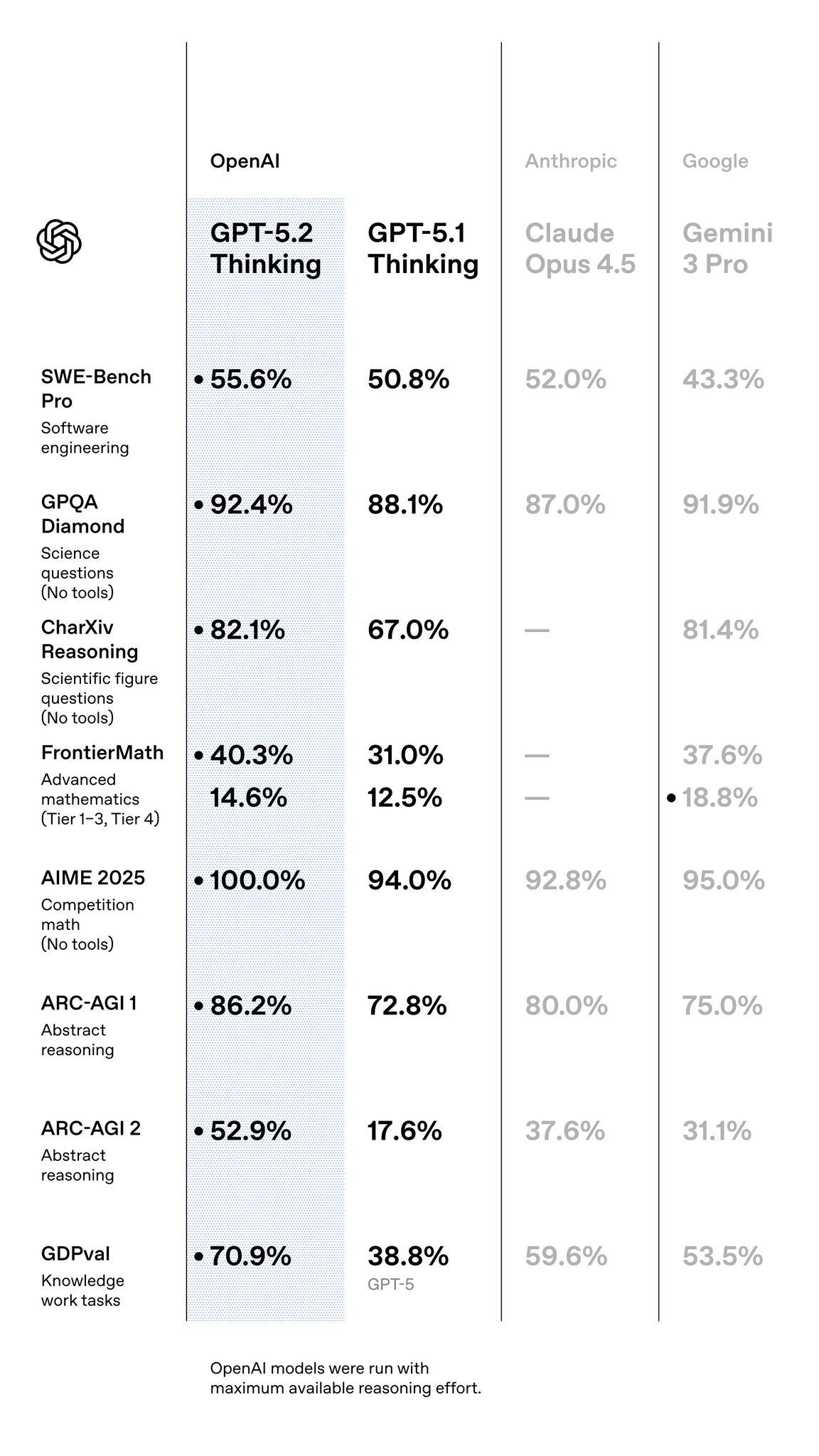

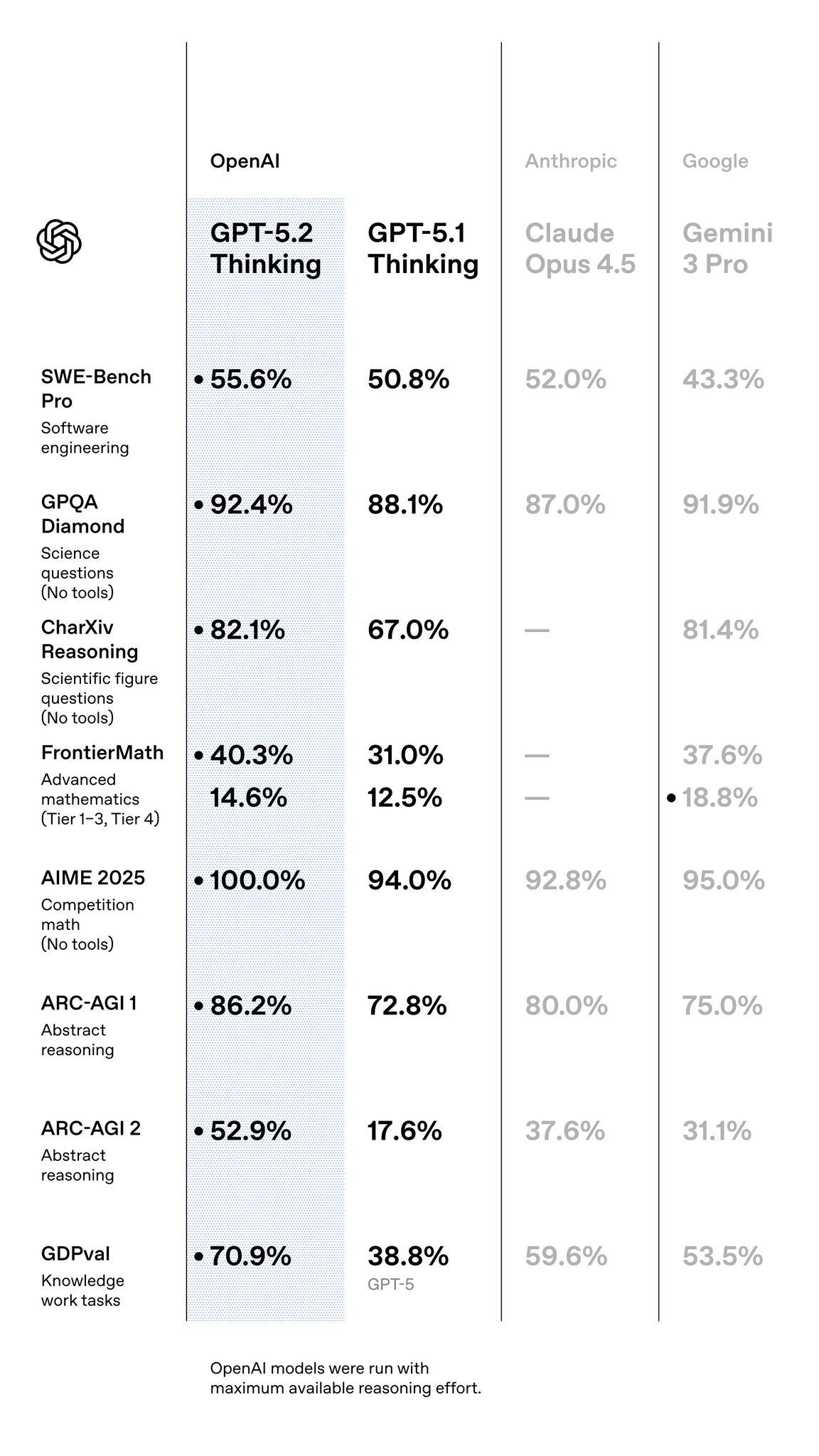

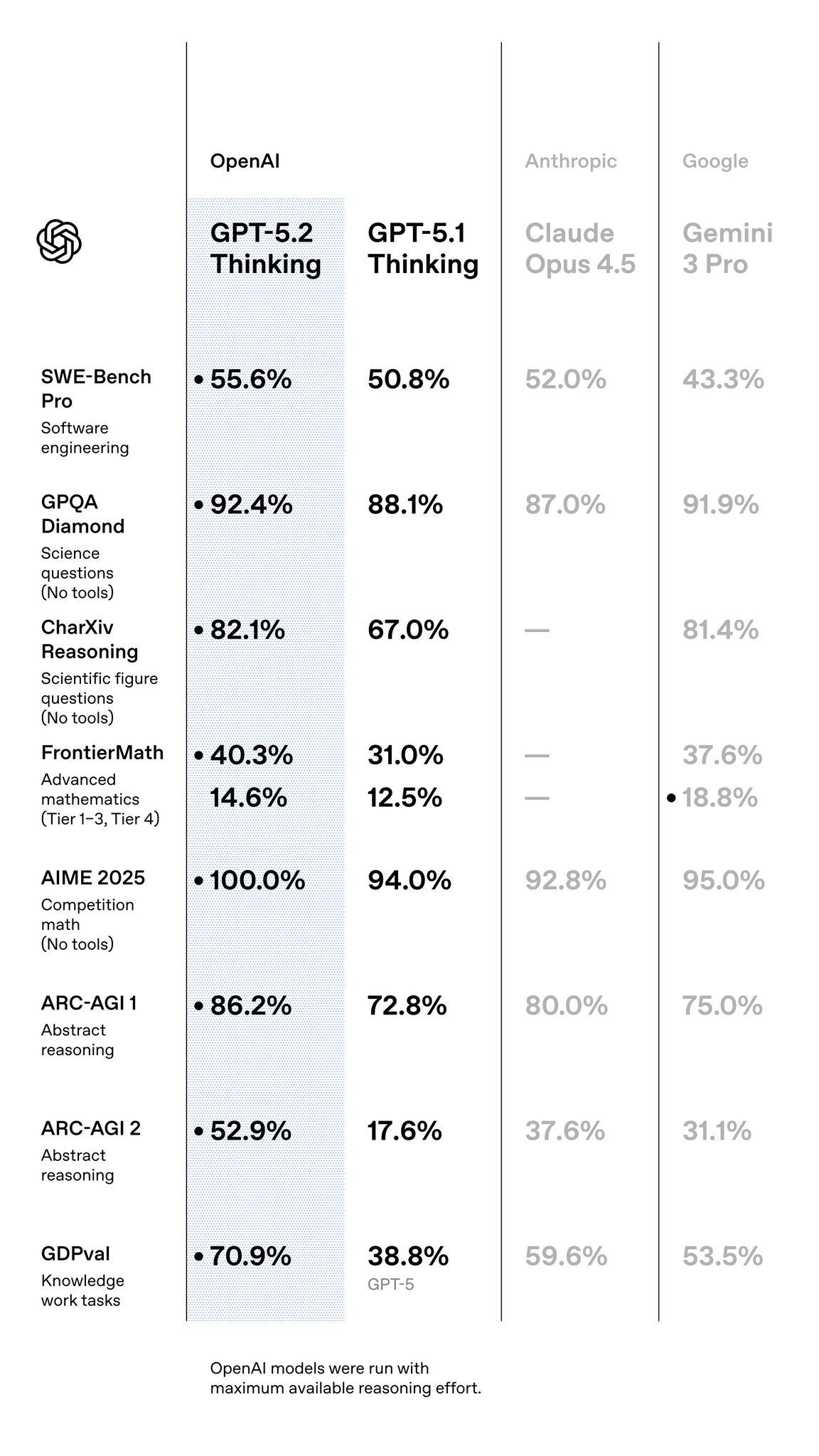

Our list kicks off with OpenAI's Generative Pre-trained Transformer (GPT) models, each release of which has surpassed its predecessor. OpenAI's latest flagship, GPT-5.2, follows closely on the heels of GPT-5.1. It offers a significantly expanded context window of 400K tokens (up from 128K in GPT-4 Turbo and GPT-4o) and, according to OpenAI, achieves a perfect 100% score on the AIME 2025 math benchmark. Crucially for enterprise reliability, OpenAI reports a hallucination rate of 6.2%, roughly 40% lower than earlier generations.

OpenAI has also moved into the open-weight space with gpt-oss-120b and gpt-oss-20b. Released under the Apache 2.0 license, they provide strong real-world performance at a lower cost. Both are optimized for efficient deployment: gpt-oss-20b can run on consumer hardware with about 16 GB of memory, and gpt-oss-120b fits on a single 80 GB GPU. They are particularly effective for agentic workflows, tool use, and few-shot function calling.
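To make "function calling" concrete, here is a minimal sketch of the application side of a tool-use loop. It assumes the OpenAI-style Chat Completions tool format (a JSON Schema per tool, with tool calls returned as a function name plus JSON-encoded arguments). The `get_order_status` tool, its fake database, and the simulated model reply are hypothetical, so no API call is made.

```python
import json

# Tool definition in the OpenAI-style "tools" format: a name, a description,
# and a JSON Schema describing the arguments the model may supply.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",  # hypothetical tool for illustration
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        },
    }
]


def get_order_status(order_id: str) -> dict:
    """Hypothetical backend lookup; a real app would query a database."""
    fake_db = {"ORD-1": "shipped", "ORD-2": "processing"}
    return {"order_id": order_id, "status": fake_db.get(order_id, "unknown")}


# Map tool names the model may request to local Python callables.
REGISTRY = {"get_order_status": get_order_status}


def dispatch_tool_calls(tool_calls: list) -> list:
    """Run each requested tool and build the 'tool' messages to send back."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        # The model returns arguments as a JSON-encoded string.
        args = json.loads(call["function"]["arguments"])
        output = REGISTRY[name](**args)
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(output)}
        )
    return results


# Simulated model reply requesting one tool call (no network access needed).
simulated_calls = [
    {
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_order_status", "arguments": '{"order_id": "ORD-1"}'},
    }
]
messages = dispatch_tool_calls(simulated_calls)
print(messages[0]["content"])  # {"order_id": "ORD-1", "status": "shipped"}
```

In a real loop, these tool messages would be appended to the conversation and sent back to the model, which then writes its final answer using the tool results.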

With the release of GPT-5.2, older models such as GPT-4o, GPT-4, and GPT-3.5 are being deprecated. GPT-4o was a notable step toward more natural human-computer interaction thanks to its multimodal capabilities, but it is now largely superseded. Likewise, the foundational GPT-4 and GPT-3.5 models are less capable than GPT-5.2, which is less prone to reasoning errors and hallucinations. Users who built workflows around older reasoning models such as o1 and o3 may face disruption as OpenAI consolidates its offerings.

Despite their advanced conversational and reasoning capabilities, OpenAI's flagship GPT models remain proprietary. OpenAI keeps their training data and parameters confidential, and full access typically requires a paid API plan or subscription. We recommend GPT-5.2 for businesses that need strong multi-step reasoning, conversational dialogue, and real-time interactions, particularly those with a flexible budget.

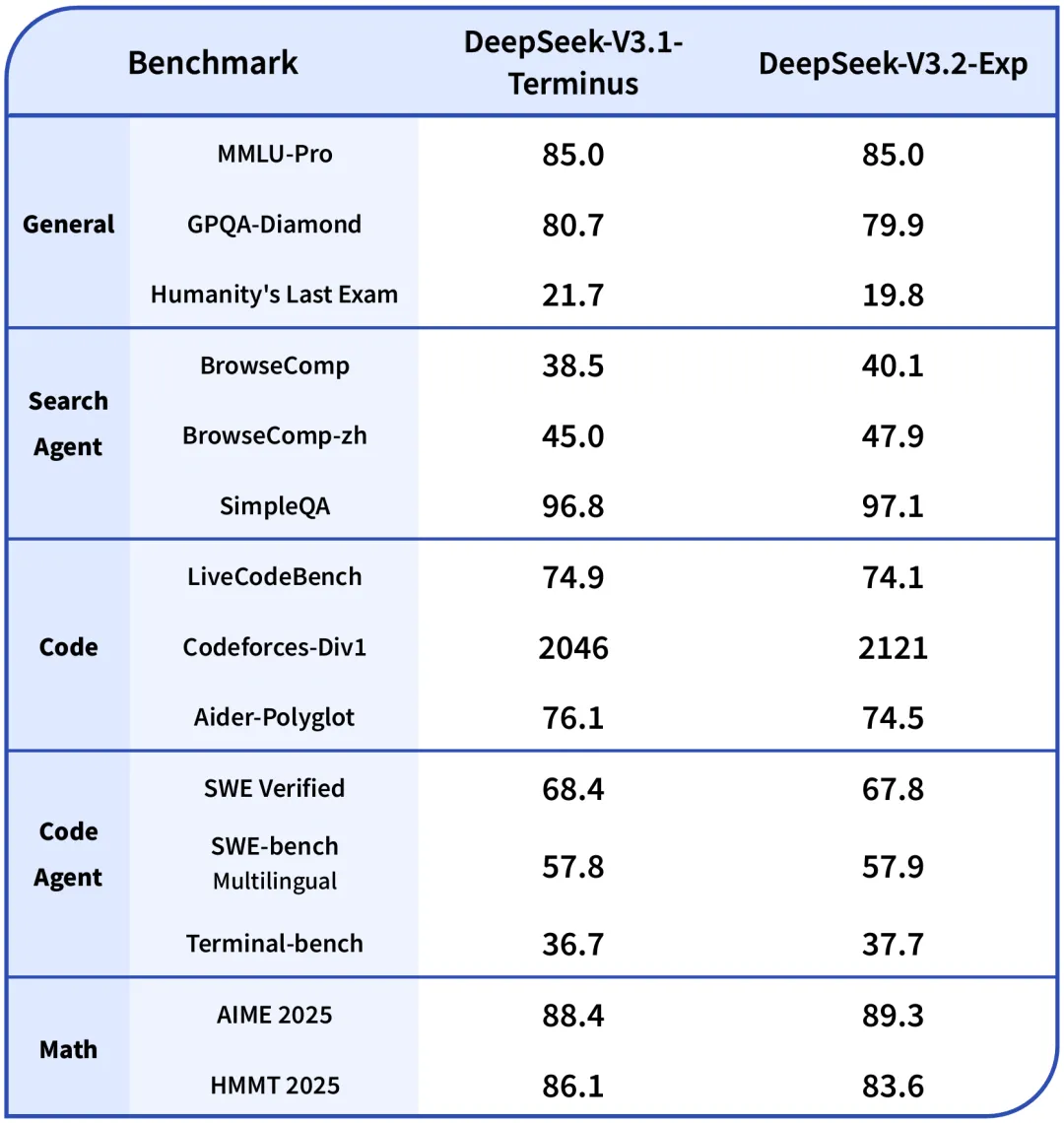

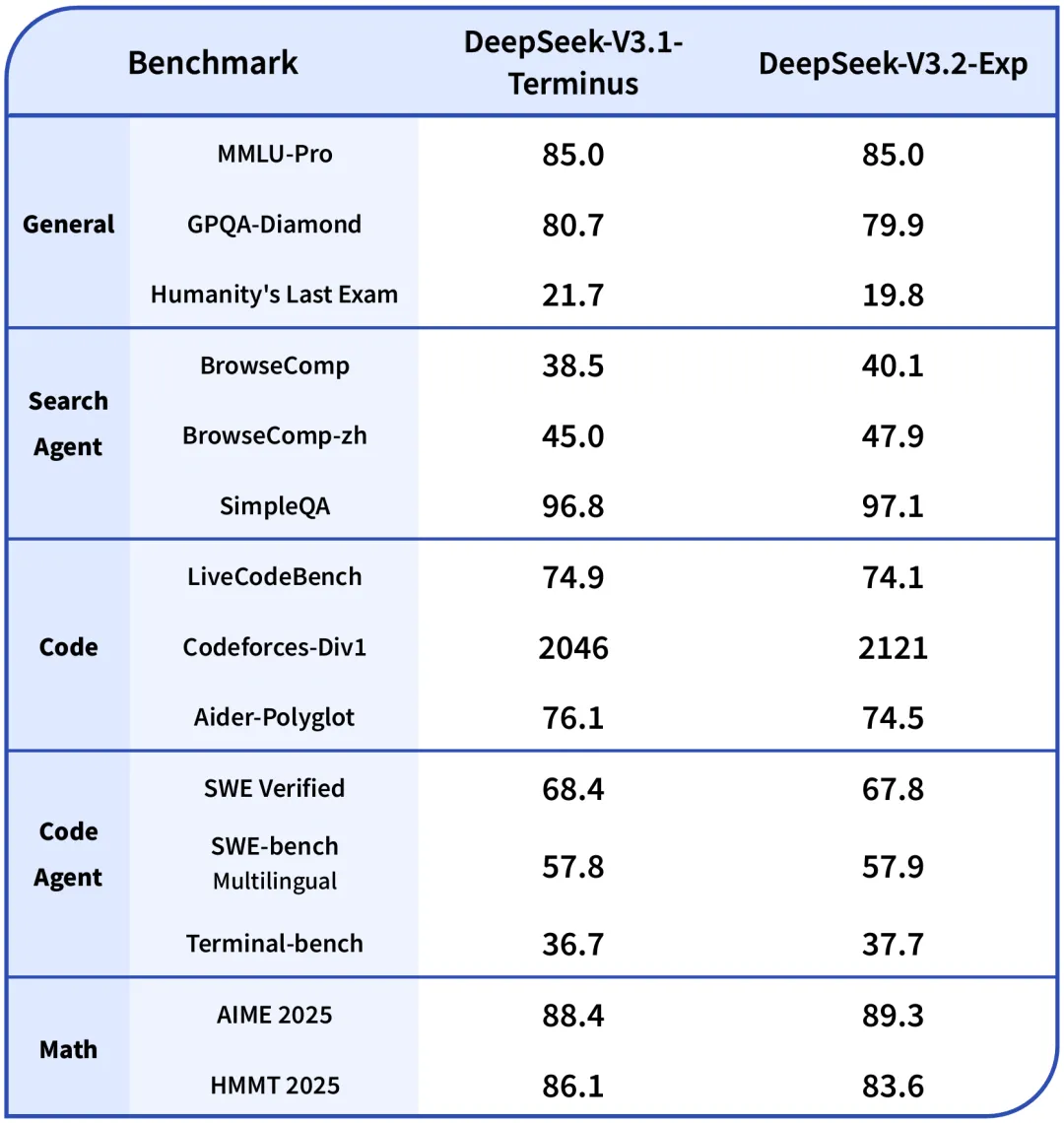

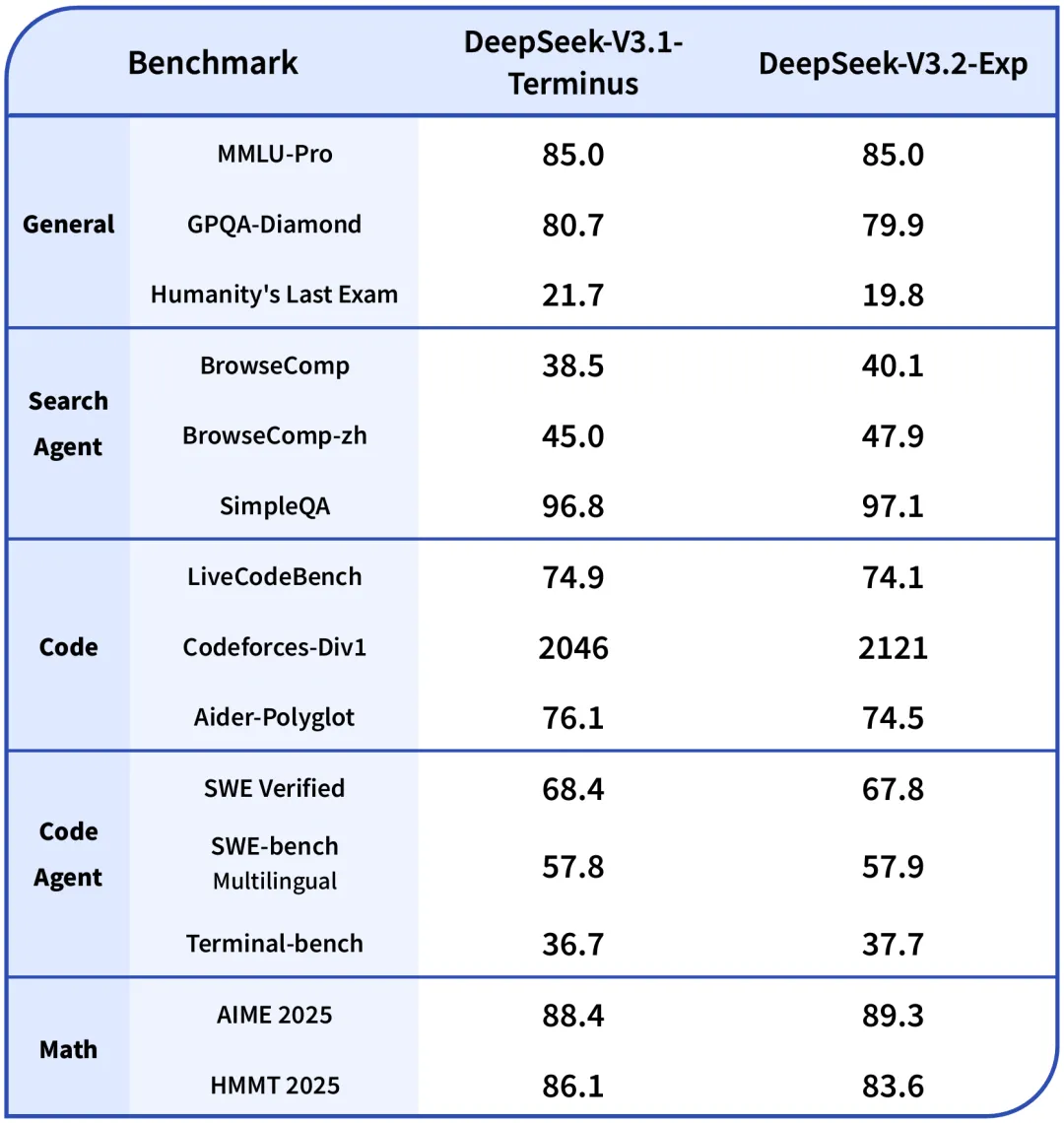

DeepSeek, a Chinese AI company, continues to push the boundaries of AI innovation with both specialized and general-purpose models. Since late 2024, it has been steadily releasing and updating models, including DeepSeek-V3.2-Exp and the DeepSeek-R1 series.

DeepSeek-V3.2-Exp introduces DeepSeek Sparse Attention (DSA), a fine-grained sparse attention mechanism that DeepSeek presents as a first of its kind and that reportedly improves computational efficiency by about 50%, especially on long contexts. For enterprises focused on ROI, DeepSeek offers aggressive pricing, with input costs as low as $0.07 per million tokens on cache hits. DeepSeek also describes its V3.2 line as its first to integrate "thinking" directly into tool use, bridging the gap between reasoning and action.
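The core idea behind sparse attention can be illustrated with a simplified top-k scheme: each query attends only to the k keys it scores highest instead of to every token. This is a toy sketch in plain Python, not DeepSeek's implementation. DSA reportedly uses a separate lightweight indexer to choose which tokens each query sees, whereas this sketch simply reuses the full attention scores for selection, so it shows the math but not the speedup.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def topk_sparse_attention(queries, keys, values, k):
    """Each query attends only to its k highest-scoring keys.

    queries, keys: lists of d-dimensional vectors; values: one vector per key.
    Returns one output vector per query.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        # Scaled dot-product scores against every key. A real system would use
        # a cheap selection step here so that most of these are never computed.
        scores = [dot(q, key) / math.sqrt(d) for key in keys]
        # Indices of the k largest scores.
        top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
        # Softmax only over the selected keys.
        weights = softmax([scores[i] for i in top])
        out = [0.0] * len(values[0])
        for w, i in zip(weights, top):
            for j, v in enumerate(values[i]):
                out[j] += w * v
        outputs.append(out)
    return outputs


# Toy example: one query, three keys, two-dimensional values.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
sparse = topk_sparse_attention(Q, K, V, k=1)  # attends to key 0 only
dense = topk_sparse_attention(Q, K, V, k=3)   # k = number of keys gives full attention
print(sparse)  # [[10.0, 0.0]]
```

With `k` equal to the number of keys, the function reduces to ordinary dense attention; a smaller `k` trades a little fidelity for far less work per query, provided the selection step itself is cheap.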

For advanced reasoning, DeepSeek introduced the R1 series, which includes R1-Zero and R1. These models target high-level problem-solving in areas such as financial analysis, complex mathematics, and automated theorem proving.

DeepSeek also released DeepSeek-Prover-V2, an open-source model for formal theorem proving in Lean 4. To make R1's capabilities more accessible, DeepSeek developed the DeepSeek-R1-Distill series: smaller, more efficient models based on Qwen and Llama architectures and fine-tuned on reasoning samples generated by R1. These distilled models are well suited to production environments where computational efficiency is a priority.
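For readers unfamiliar with formal theorem proving, here is what a Lean 4 statement and proof look like. These two toy theorems are illustrative only and are not output from DeepSeek-Prover-V2. A prover model's job is to generate proofs like the ones after `:=`, which Lean then checks mechanically.

```lean
-- Statement: addition of natural numbers is commutative.
-- The proof is a term that reuses a lemma from Lean 4's core library.
theorem my_add_comm (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- Statement: for every natural number n, 2 * n = n + n.
-- `omega` is a built-in decision procedure for linear arithmetic.
theorem two_mul_eq_add_self (n : Nat) : 2 * n = n + n := by
  omega
```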

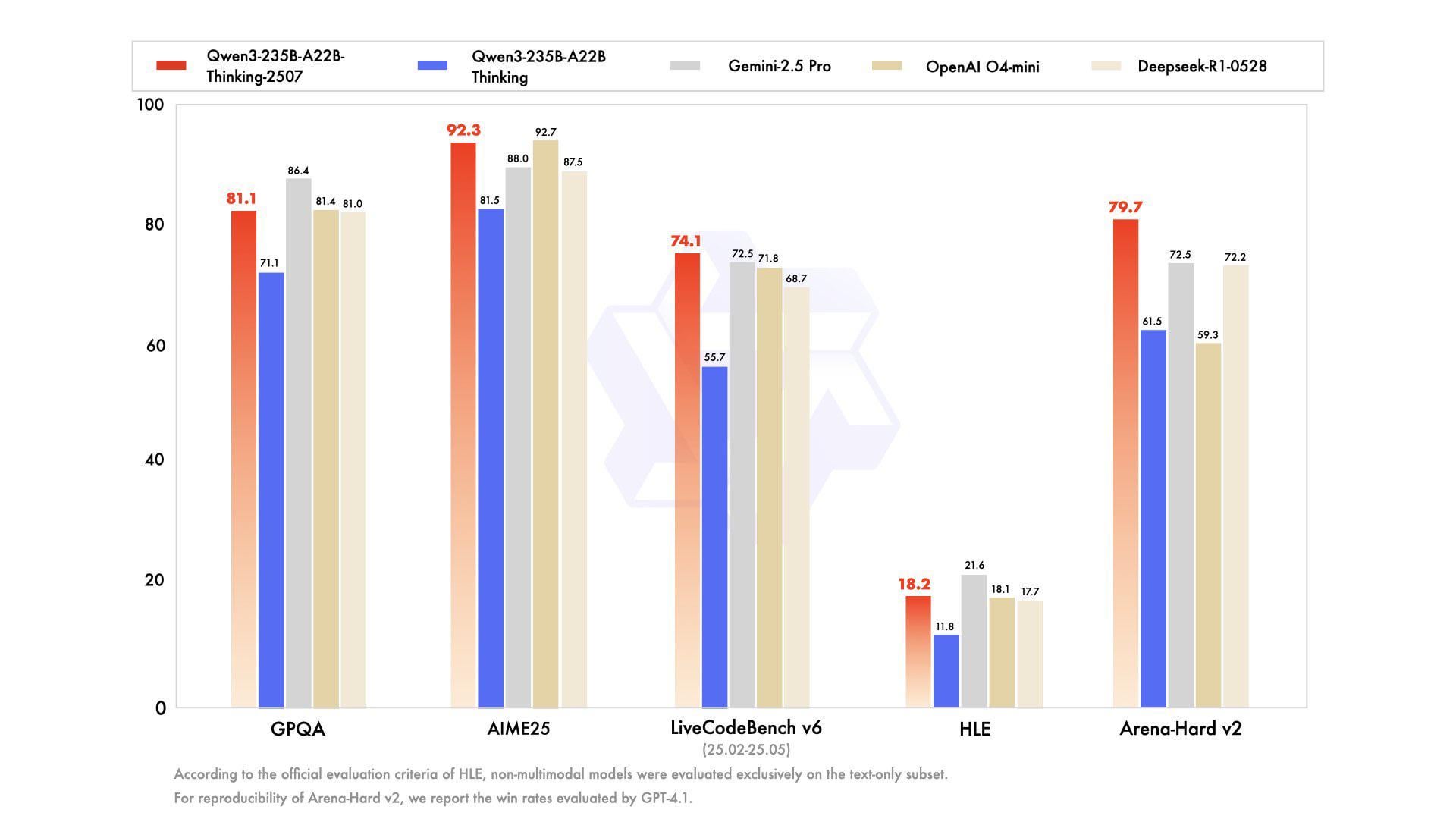

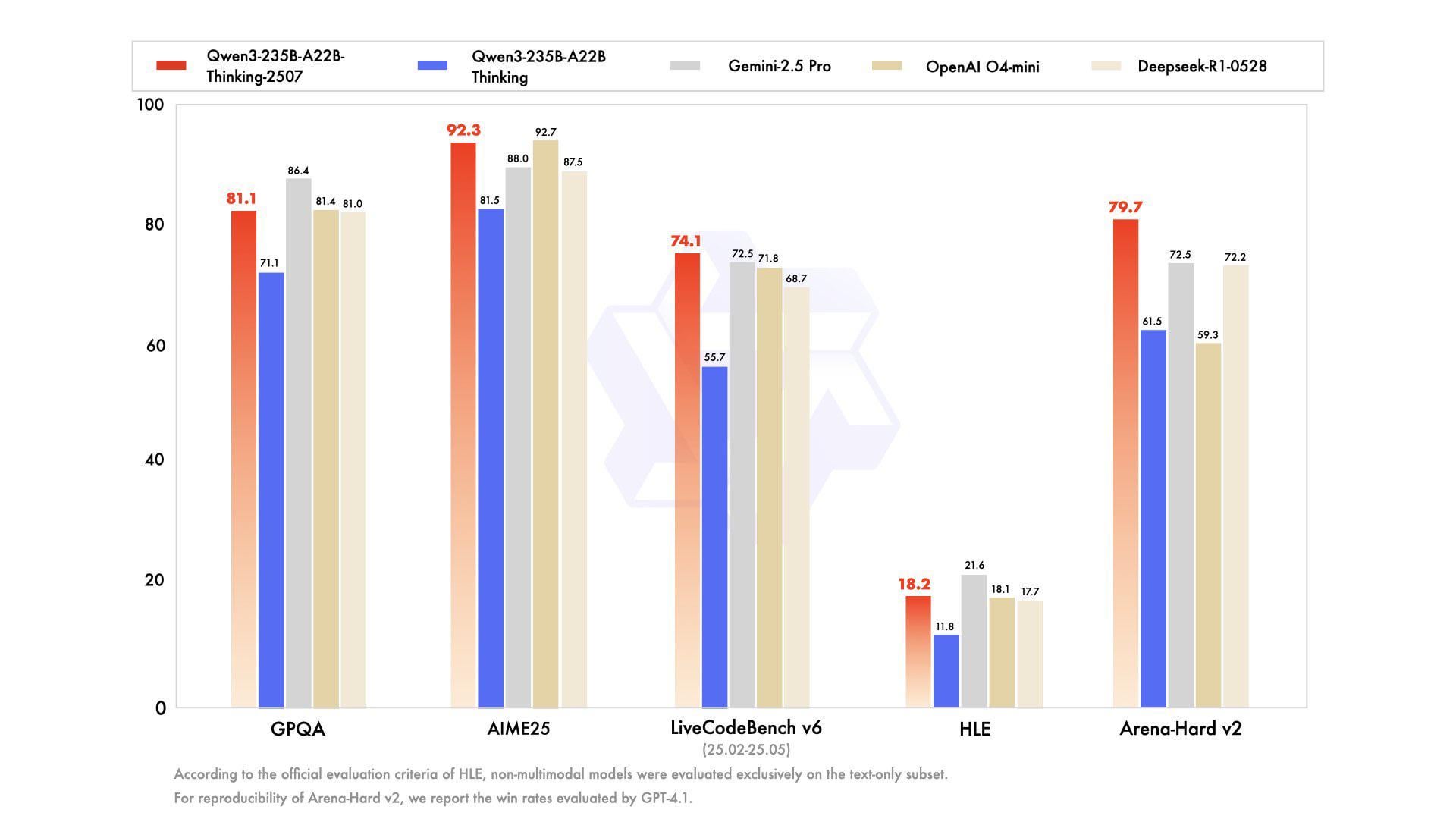

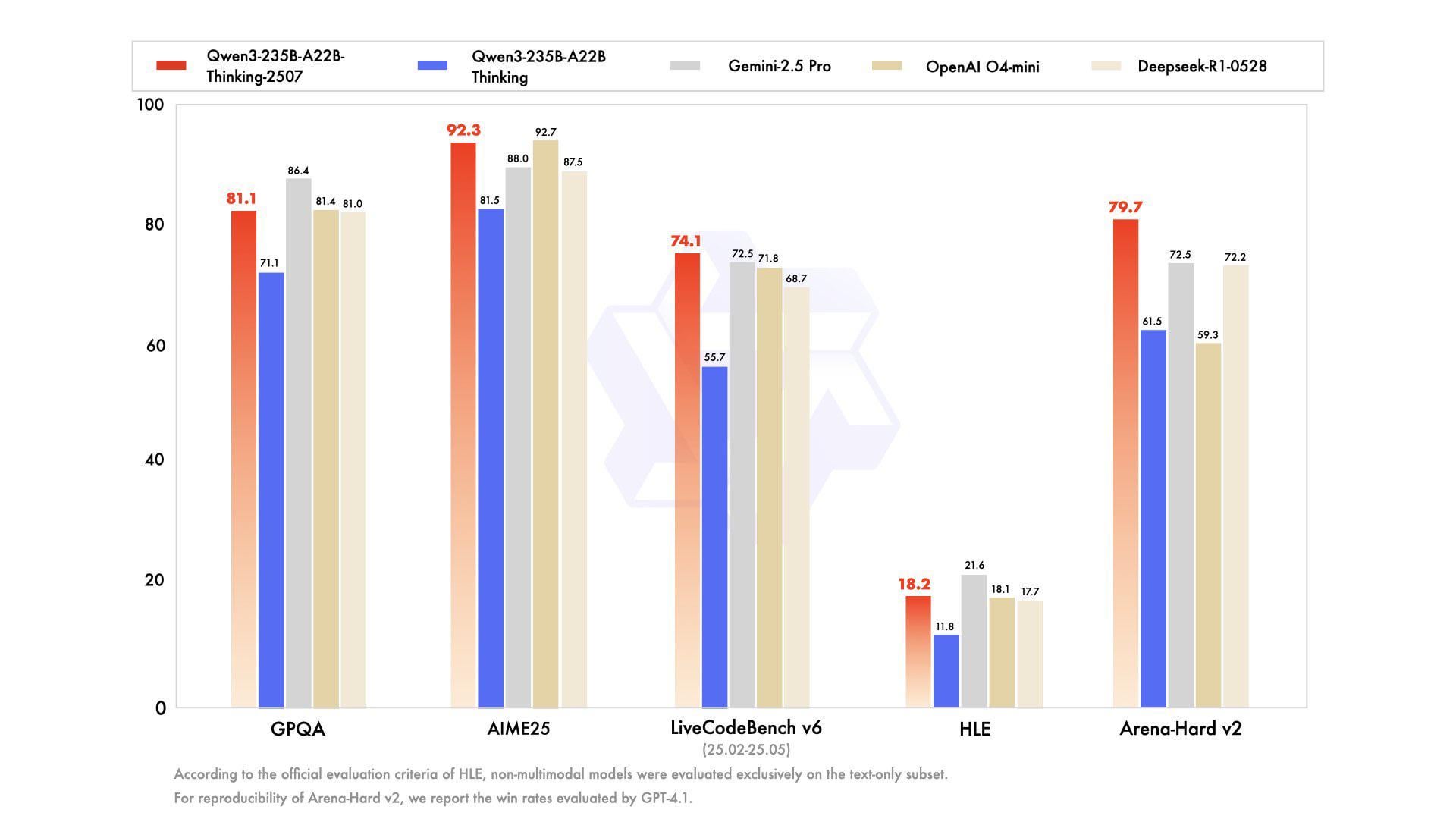

Alibaba has been actively advancing its language model lineup, with the latest major releases centered on the Qwen3 series. The series combines dense and Mixture-of-Experts (MoE) models with hybrid thinking and non-thinking modes, and Alibaba reports that they meet or beat GPT-4o and DeepSeek-V3 on most public benchmarks while using far less compute.
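The "far less compute" claim follows from how Mixture-of-Experts layers work: a router scores every expert for each token, but only the top-k experts actually run. The sketch below shows that routing step in plain Python with toy scalar "experts." The expert functions, router logits, and k=2 are hypothetical; in real models each expert is a learned feed-forward network and the router is learned too.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_forward(x, router_logits, experts, k=2):
    """Route input x to the top-k experts and mix their outputs.

    router_logits: one score per expert for this token.
    experts: list of callables; only k of them are evaluated.
    Returns (output, indices_of_experts_used).
    """
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    gates = softmax([router_logits[i] for i in top])
    output = sum(g * experts[i](x) for g, i in zip(gates, top))
    return output, top


# Four toy experts; in a real model each is a feed-forward network.
experts = [lambda x: x * 1.0, lambda x: x * 2.0, lambda x: x * 3.0, lambda x: x * 4.0]
out, used = moe_forward(10.0, router_logits=[0.1, 2.0, 2.0, -1.0], experts=experts, k=2)
print(used, out)  # [1, 2] 25.0
```

Only 2 of the 4 experts run here, which is why MoE models distinguish total parameters (all experts) from active parameters per token (the selected experts). Qwen3-235B-A22B, for example, has 235B total but about 22B active parameters.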

Alibaba's open-weight Qwen3-Next models and its proprietary Max models (Qwen2.5-Max and the newer Qwen3-Max, an MoE model with more than 1 trillion parameters) have pushed the lineup further. Qwen3 supports 119 languages and dialects, and Alibaba reports scores of 92.3% on AIME25 and 74.1% on the real-world coding benchmark LiveCodeBench v6 for its top Qwen3 reasoning model. Alibaba also reports that its Max models outperform GPT-4o and Llama-3.1-405B on key benchmarks, making the family a heavy hitter for global, multilingual deployments.

The open-weight Qwen3 models, ranging from 0.6 billion to 235 billion parameters, are released under the Apache 2.0 license and are available through Alibaba Cloud's API, Hugging Face, and ModelScope. Alongside the MoE models, the Qwen3 series includes traditional dense models such as Qwen3-32B and Qwen3-4B, which are flexible enough to deploy in a variety of settings. For specialized tasks, there are models like Qwen3-Coder for software engineering, Qwen-VL for vision-language applications, and Qwen-Audio for audio processing.

For businesses and developers, the Qwen family has gained significant traction: Alibaba reports adoption by more than 90,000 enterprises across consumer electronics, gaming, and other sectors.

Grok is the generative AI chatbot from xAI, integrated with the social media platform X to offer real-time information and a witty conversational experience. The Grok family of models is designed as a tiered lineup, with each model optimized for a different purpose.

Released in November 2025, Grok 4.1 took first place at launch on the LMArena text leaderboard (1483 Elo) and on EQ-Bench, an emotional-intelligence benchmark. Most notably for business use, xAI reports that the hallucination rate dropped from about 12% in Grok 4 to just over 4% in Grok 4.1, a 65% relative reduction. In blind A/B tests, users preferred Grok 4.1 over the previous production model nearly 65% of the time.
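The "65% reduction" is a relative change, not a drop of 65 percentage points, and the arithmetic is easy to check. The sketch below uses 4.2% as an assumed stand-in for "just over 4%"; the same helpers can also back out the earlier hallucination rate implied by OpenAI's "6.2%, roughly 40% lower" figure.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a rate fell, relative to its starting value."""
    return (before - after) / before


def implied_baseline(after: float, reduction: float) -> float:
    """Starting rate implied by a final rate and a relative reduction."""
    return after / (1.0 - reduction)


# Grok 4 -> Grok 4.1 hallucination rates (4.2% is an assumed value).
print(round(relative_reduction(12.0, 4.2), 2))  # 0.65
# GPT-5.2's 6.2% after a ~40% reduction implies an earlier rate near 10.3%.
print(round(implied_baseline(6.2, 0.40), 1))  # 10.3
```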

For developers, Grok Code Fast 1 is a specialized, cost-effective model built for "agentic coding," excelling at automating software development workflows, debugging, and generating code.

These recent models build on the foundation laid by their predecessors. Grok 3 introduced advanced reasoning capabilities with a "Think" mode for step-by-step problem-solving and a "DeepSearch" function for in-depth, real-time research. Grok 2 expanded the lineup into multimodality, adding image understanding and text-to-image generation.

Given this diverse lineup, Grok is recommended for a range of applications. Grok 4 is ideal for heavy research, data analysis, and expert-level problem-solving. Grok Code Fast 1 is the go-to for software development where speed and cost are a priority. For a balance of speed and quality, the Grok 3 models are well-suited for advanced problem-solving, education, and real-time analysis of current events.

Meta continues to be a leader in the LLM space with its state-of-the-art Llama models, prioritizing an open-weight approach. The latest major release is Llama 4, which includes the natively multimodal Llama 4 Scout and Llama 4 Maverick. These models were pretrained on text, image, and video data, accept text and image inputs, and are built on a Mixture-of-Experts (MoE) architecture for greater efficiency.

Llama 4 Scout is notable for its industry-leading context window of up to 10 million tokens, making it ideal for tasks requiring extensive document analysis. The Llama 3 series, including Llama 3.1 and 3.3, offers powerful text-based models optimized for customer service, data analysis, and content creation.

In one recent benchmark comparison, Llama 4 Maverick scored 68.47%, placing it ahead of Llama 3.1 405B. In code-compilation tests, it successfully compiled 1,007 instances, outperforming previous Llama iterations. For scale, Scout's 10 million token window holds roughly 80 novels.
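The "roughly 80 novels" figure is a back-of-the-envelope conversion. The sketch below reproduces it under two stated assumptions, about 0.75 English words per token and about 90,000 words per novel; real ratios vary by tokenizer and language, and the helper names are hypothetical.

```python
WORDS_PER_TOKEN = 0.75     # assumption: rough average for English text
WORDS_PER_NOVEL = 90_000   # assumption: a typical full-length novel


def novels_that_fit(context_tokens: int) -> float:
    """Approximate number of novels a context window can hold."""
    return context_tokens * WORDS_PER_TOKEN / WORDS_PER_NOVEL


def fits_in_context(word_count: int, context_tokens: int, reserve_tokens: int = 0) -> bool:
    """Check whether a document (in words) fits, leaving room for the reply."""
    needed_tokens = word_count / WORDS_PER_TOKEN
    return needed_tokens + reserve_tokens <= context_tokens


print(round(novels_that_fit(10_000_000)))  # 83 (Llama 4 Scout's 10M tokens)
print(round(novels_that_fit(200_000), 1))  # 1.7 (a 200K-token window)
print(fits_in_context(1_000_000, 10_000_000, reserve_tokens=8_000))  # True
```

The `reserve_tokens` parameter reflects that the model's answer shares the same window as the input, so a document that "just fits" leaves no room for output.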

Unlike closed models such as GPT-5.2 and Gemini, Llama's open weights give developers greater flexibility and control. Teams can fine-tune the models for specific needs and deploy them on private infrastructure, which appeals to businesses seeking scalability and stronger data security. On performance, Meta reports that Llama 4 Maverick outperforms competitors like GPT-4o and Gemini 2.0 Flash across a broad range of benchmarks, especially in coding, reasoning, and multilingual tasks, while Scout leads its smaller size class. The open availability and competitive performance of these models have fostered a large community of researchers and developers.

Anthropic's latest flagship models, the Claude 4 family (Opus 4 and Sonnet 4, followed by the 4.5 releases), build on the Claude 3 series as hybrid reasoning models that can either answer near-instantly or think at length. The standout feature is "extended thinking," in which the model works through a problem in a dedicated thinking step, within a configurable token budget, before writing its final answer. This lets it refine its approach, weigh alternative reasoning paths, and check its work, making it well suited to complex, multi-step problem-solving.
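As a rough sketch of how extended thinking is switched on, the helper below builds a request body for Anthropic's Messages API with a `thinking` block and a token budget. It only constructs and validates the dictionary; no network call is made. The model alias and the limits it enforces (a budget of at least 1,024 tokens that stays below `max_tokens`) reflect our understanding of Anthropic's documentation at the time of writing and should be checked against the current API reference.

```python
import json


def build_thinking_request(prompt: str, budget_tokens: int, max_tokens: int,
                           model: str = "claude-sonnet-4-5") -> dict:
    """Build a Messages API request body with extended thinking enabled.

    Assumed constraints: budget_tokens >= 1024 and budget_tokens < max_tokens,
    since the thinking budget counts toward the overall output limit.
    """
    if budget_tokens < 1024:
        raise ValueError("thinking budget must be at least 1024 tokens")
    if budget_tokens >= max_tokens:
        raise ValueError("max_tokens must exceed the thinking budget")
    return {
        "model": model,  # assumed model alias; check the current model list
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


body = build_thinking_request(
    "Plan a 3-step database migration.", budget_tokens=4000, max_tokens=8000
)
print(json.dumps(body["thinking"]))  # {"type": "enabled", "budget_tokens": 4000}
```

With the official Python SDK, the same fields are passed as keyword arguments to `client.messages.create(...)`, and the response contains `thinking` content blocks ahead of the final `text` block.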

Claude models are designed as a versatile family, with each tier balancing intelligence, speed, and cost. Claude Opus (Opus 4, and now Opus 4.5) is the most powerful tier, excelling at complex, long-running tasks and agent workflows, with particular strengths in coding and advanced reasoning. Claude Sonnet 4.5, which Anthropic positioned at launch as its best model for real-world agents and coding, can sustain complex, multi-step tasks autonomously for more than 30 hours, according to Anthropic, while remaining an all-around performer for enterprise workloads such as data processing.

The 4.5 lineup also includes Claude Haiku 4.5, released on October 15, 2025. In computer use, the family led at launch: Sonnet 4.5 scored 61.4% on OSWorld, compared with previous bests of around 45%.

On the coding front, Sonnet 4.5 scored 77.2% on SWE-bench Verified and has reportedly maintained context across debugging sessions of 6+ hours with an 89% success rate. For enterprises, Anthropic reports that Opus 4.5 delivers higher performance while using 48% fewer tokens than previous high-effort models.

Like the Claude 3 models, the Claude 4 models offer a 200K-token context window (with a 1 million token context window in beta on Sonnet 4), allowing them to process lengthy documents. The models are multimodal, processing both text and images, and support "computer use," first introduced with Claude 3.5 Sonnet, which lets them operate a computer's screen with growing proficiency. Overall, the Claude family is a strong competitor to Google's Gemini and OpenAI's GPT models, consistently performing well on coding and reasoning benchmarks.

Mistral AI, a prominent player in the LLM landscape, offers a diverse portfolio of models for both the open-source community and enterprise clients. Its key differentiator is a specialized, flexible lineup with options tailored to specific use cases.

Mistral has released the Mistral 3 family, including Mistral Large 3, a Mixture-of-Experts model with 675B total parameters. It reportedly delivers 92% of GPT-5.2's performance at roughly 15% of the price, a strong value proposition for cost-conscious scaling. Mistral also updated its edge lineup with Ministral 3, which can run on a single GPU for use cases such as drones and robotics. Its OCR 3 model reportedly achieves a 74% win rate on complex document parsing, a key capability for administrative automation.
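Claims like "92% of the performance at 15% of the price" are easiest to compare as performance per dollar. The sketch below treats both figures as relative to GPT-5.2 (performance 1.0, price 1.0) and uses only the numbers quoted above; the $1.00 baseline job cost is hypothetical. Keep in mind that "performance" here is an aggregate whose meaning depends on which tasks are measured.

```python
def value_ratio(relative_performance: float, relative_price: float) -> float:
    """Performance per unit of price versus a baseline (baseline = 1.0)."""
    return relative_performance / relative_price


def runs_per_budget(budget: float, price_per_run: float) -> int:
    """How many whole runs a fixed budget covers."""
    return int(budget // price_per_run)


# Figures quoted above, relative to GPT-5.2.
print(round(value_ratio(0.92, 0.15), 1))  # 6.1

# Hypothetical: if a job costs $1.00 on the baseline model, $100 covers
# 100 baseline runs, or 666 runs at 15% of the price.
print(runs_per_budget(100.0, 1.00), runs_per_budget(100.0, 0.15))  # 100 666
```

A ratio above 1.0 favors the cheaper model only if its 8% performance gap is acceptable for the task; for workloads that need the very top score, the raw performance number matters more than the ratio.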

For developers, there is Devstral Medium, an "agentic coding" model, and Codestral 2508, optimized for low-latency coding tasks across more than 80 programming languages. Mistral also provides smaller "edge" models such as Ministral 3B and 8B for resource-constrained devices, and Voxtral, a family of audio models for speech-to-text.

On the open side, many of Mistral's models are released under the Apache 2.0 license. Mixtral 8x22B is a powerful open model built on a Mixture-of-Experts (MoE) architecture, known for its performance and computational efficiency. Other open models include Devstral Small 1.1 for coding, Pixtral 12B for multimodal tasks, and Mathstral 7B for solving mathematical problems.

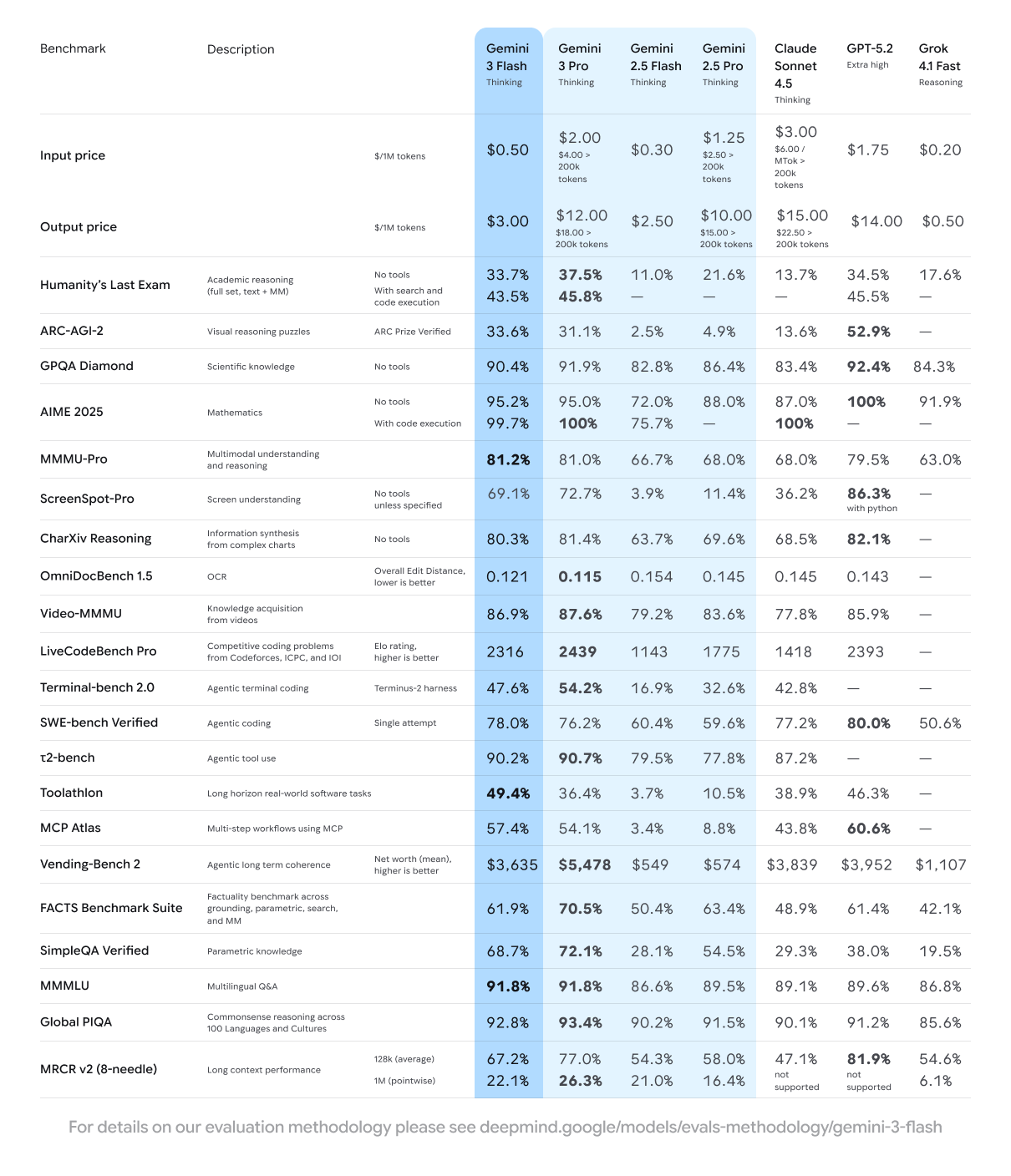

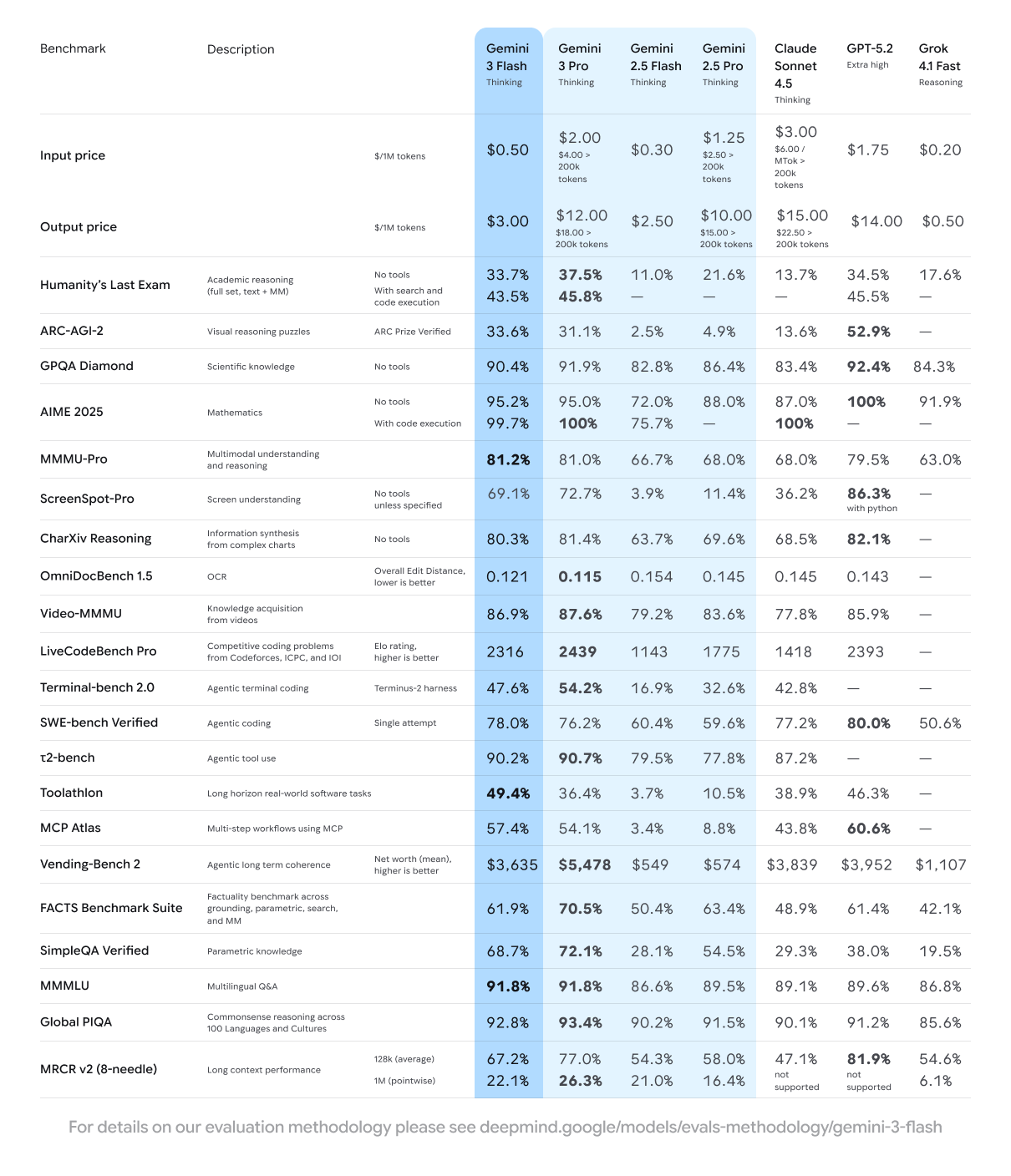

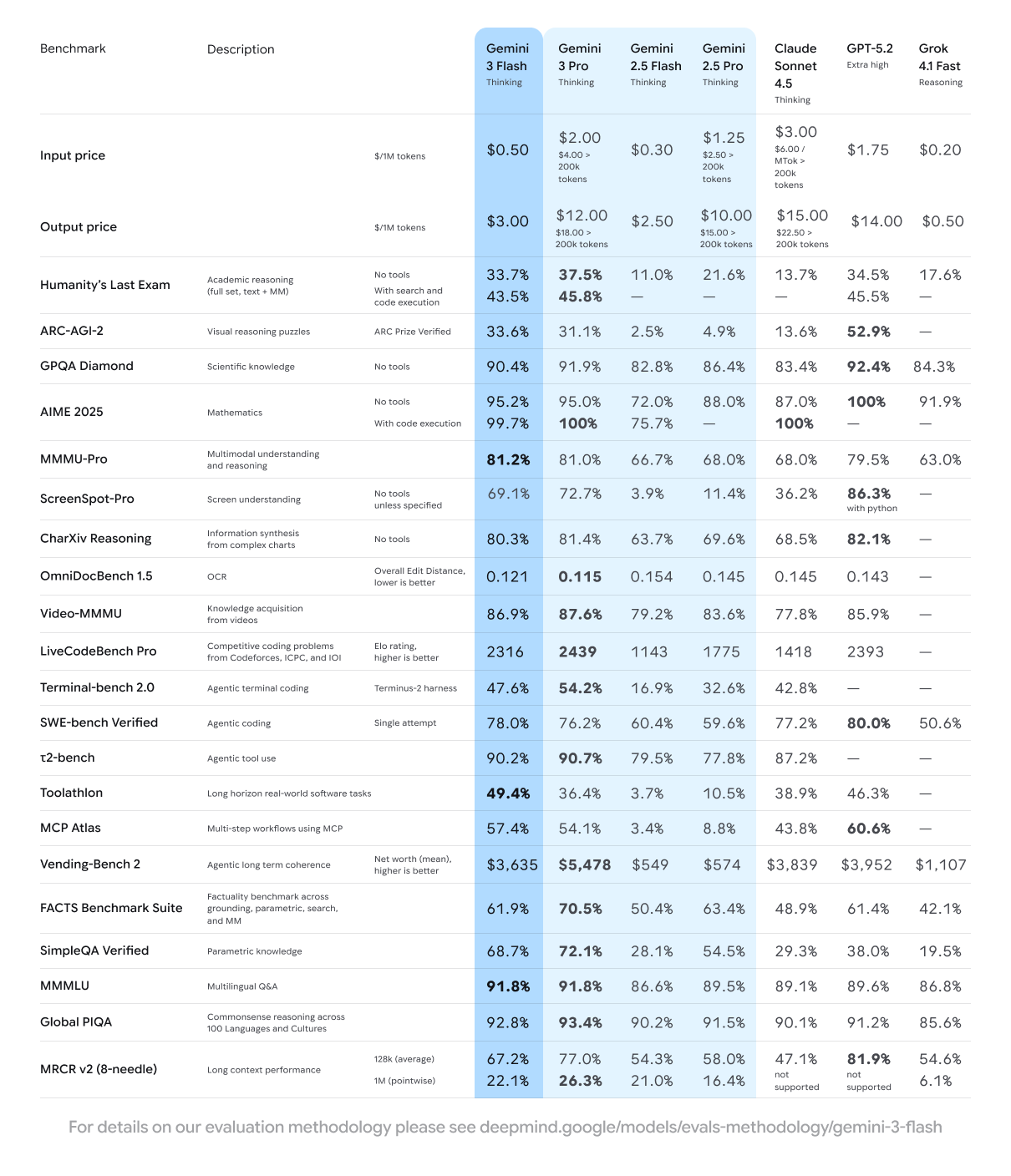

Google's Gemini 3 Pro and Gemini 3 Flash show major improvements. Gemini 3 Pro features a 1M-token context window and, according to Google, scored 100% on AIME 2025 when allowed code execution.

The "Deep Think" mode in Gemini 3 reportedly improves reasoning scores about 2.5x over previous generations, reaching 45.1% on ARC-AGI-2. For developers, Gemini 3 Flash stands out with 78% on SWE-bench Verified, outperforming even the Pro version on this coding benchmark.

For developers and businesses, Google offers several specialized variants. The Flash and Flash-Lite models are optimized for high-speed, cost-efficient, latency-sensitive tasks such as classification and translation. Google has also introduced specialized media models, including Gemini 2.5 Flash Image, nicknamed "Nano Banana," and its successor Gemini 3 Pro Image ("Nano Banana Pro") for advanced image generation and editing, as well as the state-of-the-art video generation model Veo 3. Veo 3 creates high-fidelity short videos from text or images and is integrated into the Gemini app.

While Gemini is proprietary and closed-source, Google also provides the Gemma family of open-weight models, built from the same research. Gemma 3 supports a context window of up to 128,000 tokens and comes in several parameter sizes, making it a flexible alternative for developers, academics, and startups who need to fine-tune and deploy models locally with greater control.

Because Gemini is a proprietary model, companies handling sensitive or confidential data must confirm that the vendor complies with data privacy and security regulations such as GDPR and HIPAA. This due diligence is crucial to mitigating the security risks of sending data to external servers.

Cohere's Command family of models targets enterprise use cases. The flagship Command A model features a 256,000-token context window and requires only two GPUs for private deployment, which Cohere positions as more hardware-efficient than competitors like GPT-4o. Cohere's human evaluations suggest that Command A matches or outperforms larger models on business, STEM, and coding tasks. Cohere has also released specialized models: Command A Vision for image and document analysis, Command A Reasoning for complex problem-solving, and Command A Translate, which supports 23 languages and, according to Cohere, outperforms competing translation services.

These models are built for retrieval-augmented generation (RAG), which lets them retrieve and cite internal company documents to ground their responses. Cohere's focus on multilingual support, including for often underserved languages, is a key differentiator. The company also offers secure on-premises deployment, which is critical for sectors that handle sensitive data, such as finance and healthcare. Overall, Cohere focuses on delivering specific, efficient tools for business workflows rather than topping general-purpose benchmarks.
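To illustrate the retrieval-augmented generation pattern described above, here is a deliberately simple sketch: it ranks internal documents by keyword overlap with the question and assembles a prompt whose sources are numbered so the model can cite them. The documents, the scoring method, and the prompt wording are hypothetical, and this is not Cohere's API. Production systems typically use embedding-based search and rerankers (Cohere sells both) rather than word overlap.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase word set; a stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict, top_k: int = 2) -> list:
    """Return ids of the top_k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokenize(docs[doc_id])), reverse=True)
    return ranked[:top_k]


def build_grounded_prompt(question: str, docs: dict, top_k: int = 2) -> str:
    """Number the retrieved passages so the answer can cite them as [1], [2], ..."""
    ids = retrieve(question, docs, top_k)
    sources = "\n".join(
        f"[{n}] ({doc_id}) {docs[doc_id]}" for n, doc_id in enumerate(ids, start=1)
    )
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{sources}\n\nQuestion: {question}"
    )


internal_docs = {
    "hr-policy": "Employees accrue 20 vacation days per year.",
    "it-guide": "Reset your VPN password from the self-service portal.",
    "travel": "Book flights through the approved travel agency.",
}
question = "How many vacation days do employees get per year?"
prompt = build_grounded_prompt(question, internal_docs, top_k=1)
print(prompt)
```

Because every passage in the prompt carries a number and a document id, the model's citations can be traced back to the exact internal source, which is what makes grounded answers auditable.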

Cohere's recent updates emphasize hardware efficiency: Command A runs on just two H100 or A100 GPUs, whereas similar models can require up to 32. It also generates 156 tokens per second, roughly 1.75x faster than GPT-4o by Cohere's measure, making it a strong choice for low-latency, secure enterprise deployments.

If we had to choose one word to describe the rapid evolution of AI today, it would probably be something along the lines of explosive. As predicted by the Market Research Future report, the large language model (LLM) market in North America alone is expected to reach $105.5 billion by 2030. The exponential growth of AI tools combined with access to massive troves of text data has opened gates for better and more advanced content generation than we had ever hoped. Yet, such rapid expansion also makes it harder than ever to navigate and select the right tools among the diverse LLM models available.

The goal of this post is to keep you, the AI enthusiast and professional, up-to-date with current trends and essential innovations in the field. Below, we highlighted the top 9 LLMs that we think are currently making waves in the industry, each with distinct capabilities and specialized strengths, excelling in areas such as natural language processing, code synthesis, few-shot learning, or scalability. While we believe there is no one-size-fits-all LLM for every use case, we hope that this list can help you identify the most current and well-suited LLM model that meets your business’s unique requirements.

Our list kicks off with OpenAI's Generative Pre-trained Transformer (GPT) models, which have consistently exceeded their previous capabilities with each new release. The company has announced its latest flagship model, GPT-5.2, following closely on the heels of GPT-5.1. The new standard features a significantly expanded context window of 400K tokens (up from 128K in GPT-4) and achieves a perfect 100% score on the AIME 2025 math benchmark. Crucially for enterprise reliability, the hallucination rate has been reduced to 6.2%—an approximately 40% reduction from earlier generations.

OpenAI has also made a move into the open-source community with its new "open-weight" models, GPT-oss-120b and GPT-oss-20b. These are released under the Apache 2.0 license, providing strong real-world performance at a lower cost. Optimized for efficient deployment, they can even run on consumer hardware and are particularly effective for agentic workflows, tool use, and few-shot function calling.

With the release of GPT-5.2, older models like GPT-4o, GPT-4, and GPT-3.5 are being deprecated. While GPT-4o was a notable step toward more natural human-computer interaction with its multimodal capabilities, it is now largely superseded. Similarly, the foundational GPT-4 and GPT-3.5 models are considered less capable than the newer GPT-5.2, which is less prone to reasoning errors and hallucinations. Users who built workflows around older models like o3 and o1 may experience frustration as OpenAI consolidates its offerings.

Despite its advanced conversational and reasoning capabilities, GPT remains a proprietary model. OpenAI keeps the training data and parameters confidential, and full access often requires a commercial license or subscription. We recommend this model for businesses seeking an LLM that excels in multi-step reasoning, conversational dialogue, and real-time interactions, particularly those with a flexible budget.

DeepSeek, a Chinese AI company, has continued to push the boundaries of AI innovation with a focus on both specialized and versatile models. As of late 2024 and mid-2025, DeepSeek has been actively releasing and updating its models, including the DeepSeek-V3.2-Exp and the DeepSeek-R1 series.

This model introduces 'Fine-Grained Sparse Attention,' a first-of-its-kind architecture that improves computational efficiency by 50%. For enterprises focused on ROI, DeepSeek offers an aggressive pricing structure, with input costs as low as $0.07/million tokens (with cache hits). It is also the first model to integrate 'thinking' directly into tool-use capabilities, bridging the gap between reasoning and action.

For advanced reasoning, the DeepSeek-R1 series was introduced, which includes models like R1-Zero and R1. The R1 series is specifically designed for high-level problem-solving in areas such as financial analysis, complex mathematics, and automated theorem proving.

DeepSeek also released the DeepSeek-Prover-V2, an open-source model tailored for formal theorem proving in Lean 4. To make these powerful capabilities more accessible, DeepSeek has also developed the DeepSeek-R1-Distill series, which are smaller, more efficient models that have been "distilled" from the larger R1 model. These distilled models, based on architectures like Qwen and Llama, are perfect for production environments where computational efficiency is a priority.

he latest flagship models are Grok 4

Alibaba has been actively advancing its language model lineup, with the latest major releases centered around the Qwen3 series. These hybrid Mixture-of-Experts (MoE) models reportedly meet or beat GPT-4o and DeepSeek-V3 on most public benchmarks while using far less compute.

Alibaba’s Qwen3-Next and the massive Qwen2.5-Max have redefined the open-weights landscape. With a parameter scale exceeding 1 trillion via MoE architecture, Qwen now supports 119 languages and achieves 92.3% accuracy on AIME25. It is particularly strong in real-world coding, scoring 74.1% on LiveCodeBench v6. Alibaba reports that these models now outperform GPT-4o and Llama-3.1-405B on key benchmarks, making them a heavy hitter for global, multilingual deployments.

The models in the Qwen family, spanning from 4 billion to 235 billion parameters, are open-sourced under the Apache 2.0 license and available through multiple platforms including Alibaba Cloud API, Hugging Face, and ModelScope. The Qwen3 series also includes traditional dense models like the Qwen3-32B and Qwen3-4B, which are highly flexible and can be deployed in various settings. For specialized tasks, there are models like Qwen3-Coder for software engineering, Qwen-VL for vision-language applications, and Qwen-Audio for audio processing.

For businesses and developers, the Qwen family has gained significant traction, with adoption by over 90,000 enterprises across consumer electronics, gaming, and other sectors.

Grok is the generative AI chatbot from xAI, integrated with the social media platform X to offer real-time information and a witty conversational experience. The Grok family of models is designed as a tiered lineup, with each model optimized for a different purpose.

Released in November 2025, Grok 4.1 has taken the lead in pure reasoning. It currently holds the #1 position on the LMArena Elo ranking (1483 Elo) and EQ-Bench. Most notably for business use, the hallucination rate has dropped from ~12% in Grok 4 to just over 4% in Grok 4.1—a 65% reduction. In blind A/B tests, users preferred the 4.1 model nearly 65% of the time over the previous production model.

For developers, Grok Code Fast 1 is a specialized, cost-effective model built for "agentic coding," excelling at automating software development workflows, debugging, and generating code.

These recent models build on the foundation laid by their predecessors. Grok 3 introduced advanced reasoning capabilities with a "Think" mode for step-by-step problem-solving and a "DeepSearch" function for in-depth, real-time research. Grok 2 was the first to introduce multimodality, including image understanding and text-to-image generation.

Given this diverse lineup, Grok is recommended for a range of applications. Grok 4 is ideal for heavy research, data analysis, and expert-level problem-solving. Grok Code Fast 1 is the go-to for software development where speed and cost are a priority. For a balance of speed and quality, the Grok 3 models are well-suited for advanced problem-solving, education, and real-time analysis of current events.

Meta continues to be a leader in the LLM space with its state-of-the-art Llama models, prioritizing an open-source approach. The latest major release is Llama 4, which includes natively multimodal models like Llama 4 Scout and Llama 4 Maverick. These models can process text, images, and short videos, and are built on a Mixture-of-Experts (MoE) architecture for increased efficiency.

Llama 4 Scout is notable for its industry-leading context window of up to 10 million tokens, making it ideal for tasks requiring extensive document analysis. The Llama 3 series, including Llama 3.1 and 3.3, are powerful text-based models optimized for applications in customer service, data analysis, and content creation.

In recent benchmarking, Llama 4 Maverick achieved a 68.47% score on standard benchmarks, placing it ahead of Llama 3.1 405B. In code compilation tasks, it successfully compiled 1007 instances, outperforming previous iterations. Llama 4 Scout’s 10 million token context window (roughly 80 novels) remains an industry leader for massive document analysis.

Unlike closed-source models such as those from OpenAI and Google, Llama’s open-source nature offers developers greater flexibility and control. This allows for fine-tuning the models to specific needs and deploying them on private infrastructure, appealing to businesses seeking scalability and greater security. In terms of performance, Llama 4 Maverick and Scout have been reported to outperform competitors like GPT-4o and Gemini 2.0 Flash across various benchmarks, especially in coding, reasoning, and multilingual capabilities. The open availability and competitive performance of these models foster a large community of researchers and developers.

Anthropic’s latest flagship models, the Claude 4 family (Opus 4 and Sonnet 4.5), build on the foundation of the Claude 3 series by integrating multiple reasoning approaches. A standout feature is the “extended thinking mode,” which leverages a technique of deliberate reasoning or self-reflection loops. This allows the model to iteratively refine its thought process, evaluate various reasoning paths, and optimize for accuracy before finalizing an output, making it suitable for complex, multi-step problem-solving.

Claude models are designed as a versatile family, with each model balancing intelligence, speed, and cost. Claude Opus 4 is the most powerful model, excelling at complex, long-running tasks and agent workflows, with particular strengths in coding and advanced reasoning. Claude Sonnet 4.5 is the newest model, considered the best for real-world agents and coding, and can autonomously sustain complex, multi-step tasks for over 30 hours, while also being an all-around performer optimized for enterprise workloads like data processing.

The lineup now includes Claude Haiku 4.5 (October 15, 2025), completing the 4.5 family. The series is currently unmatched in computer use, scoring 61.4% on OSWorld (previous bests were ~45%).

On the coding front, the 4.5 series achieved 77.2% on SWE-bench Verified, maintaining context over 6+ hour debugging sessions with an 89% success rate. For enterprises, Opus 4.5 offers higher performance while using 48% fewer tokens than previous high-effort models.

While the older Claude 3 models featured a 200K-token context window, the Claude 4 models also offer an impressive 200K token window (with a beta 1 million token context window on Sonnet 4), allowing them to process lengthy documents. The models are multimodal, capable of processing both text and images, and have introduced new features like "computer use," which allows them to navigate a computer's screen with enhanced proficiency. Overall, the Claude family is a strong competitor to models like Google's Gemini and OpenAI's GPT-4, consistently performing well on benchmarks for coding and reasoning.

Mistral AI, a prominent player in the LLM landscape, offers a diverse portfolio of models for both the open-source community and enterprise clients. A key differentiator is its specialized and flexible model approach, providing options tailored for specific use cases.

Mistral released the Mistral 3 family, including Large 3 (675B total parameters, MoE). It delivers 92% of GPT-5.2’s performance at roughly 15% of the price, a massive value proposition for cost-conscious scaling. Mistral also updated its edge capabilities with Ministral 3, capable of running on single GPUs for drones and robotics. Their OCR 3 model now boasts a 74% win rate on complex document parsing, crucial for administrative automation.

For developers, there's Devstral Medium, an "agentic coding" model, and Codestral 2508, optimized for low-latency coding tasks in over 80 languages. Mistral also provides smaller "edge" models like Ministral 3B & 8B for resource-constrained devices, and Voxtral, a family of audio models for speech-to-text.

On the open-source side, Mistral's models are released under the Apache 2.0 license. Mixtral 8x22B is a powerful open-source model using a Mixture-of-Experts (MoE) architecture, known for its performance and computational efficiency. Other open models include Devstral Small 1.1 for coding, Pixtral 12B for multimodal tasks, and Mathstral 7B for solving mathematical problems.

Google’s Gemini 3 Pro and Gemini 3 Flash have shown massive improvements. Gemini 3 Pro features a 1M token context window and achieved a staggering 100% on AIME 2025 (with code execution).

The 'Deep Think' capabilities in Gemini 3 have improved reasoning scores by a factor of 2.5x compared to previous generations (ARC-AGI-2 score of 45.1%). For developers, Gemini 3 Flash is a standout, achieving 78% on SWE-bench Verified, outperforming even the Pro version in specific coding tasks.

For developers and businesses, Google offers several specialized versions of Gemini 3. The Gemini 3 Flash and Flash-Lite models are optimized for high-speed, cost-efficient, and latency-sensitive tasks like classification and translation. Google has also introduced specialized models, including Gemini 3 Flash Image, internally called "Nano Banana" for advanced image editing, and the state-of-the-art video generation model, Veo 3. Veo 3 can create high-fidelity, short videos from text or images and is integrated into the Gemini app.

While Gemini is a proprietary, closed-source model, Google also provides the Gemma family of open-source models, built from the same research. Gemma 3 supports a context window of up to 128,000 tokens and is available in various parameter sizes, making it an ideal, flexible alternative for developers, academics, and startups who need to fine-tune and deploy models locally with greater control.

Given that Gemini is a proprietary model, companies handling sensitive or confidential data must ensure vendor compliance with data privacy and security standards such as GDPR and HIPAA. This due diligence is crucial to mitigate security concerns related to sending data to external servers.

Cohere’s Command family of models targets enterprise use cases. The flagship Command A model features a 256,000-token context window and requires only two GPUs for private deployment, making it more hardware-efficient than competitors like GPT-4o. Human evaluations suggest Command A matches or outperforms larger models on business, STEM, and coding tasks. Cohere has also released specialized models: Command A Vision for image and document analysis, Command A Reasoning for complex problem-solving, and Command A Translate, which supports 23 languages and outperforms competitor translation services.

These models are built for retrieval-augmented generation (RAG), enabling them to access and cite internal company documents for accurate responses. Cohere’s focus on multilingualism, particularly for languages often underserved, is a key differentiator. The company’s solutions also offer secure, on-premise deployment, which is critical for sectors handling sensitive data like finance and healthcare. Cohere's strategy focuses on delivering specific, efficient tools for business workflows rather than topping general-purpose benchmarks.

Recent updates emphasize hardware efficiency: Command A runs on just two GPUs (H100 or A100), whereas similar models can require as many as 32. Cohere also reports a generation speed of 156 tokens per second, roughly 1.75x faster than GPT-4o, which makes it a strong choice for low-latency, secure enterprise deployments.
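
To see what the throughput figures mean in practice, the arithmetic below converts tokens per second into wall-clock time for a response. It assumes a constant decode rate and takes Cohere's reported numbers at face value; the GPT-4o rate is simply back-calculated from the 1.75x claim, and the 1,000-token response length is an arbitrary example.

```python
def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    # Seconds to stream num_tokens at a steady decode rate
    # (ignores time-to-first-token and network overhead).
    return num_tokens / tokens_per_second


command_a_tps = 156.0             # vendor-reported figure quoted above
gpt4o_tps = command_a_tps / 1.75  # rate implied by the "1.75x faster" claim

for name, tps in [("Command A", command_a_tps), ("GPT-4o (implied)", gpt4o_tps)]:
    print(f"{name}: {tps:.0f} tok/s, {generation_time_s(1000, tps):.1f} s per 1,000 tokens")
```

Under these assumptions, a 1,000-token answer takes about 6.4 seconds on Command A versus about 11.2 seconds at the implied GPT-4o rate.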

With the release of GPT-5.2, older models like GPT-4o, GPT-4, and GPT-3.5 are being deprecated. While GPT-4o was a notable step toward more natural human-computer interaction with its multimodal capabilities, it is now largely superseded. Similarly, the foundational GPT-4 and GPT-3.5 models are considered less capable than the newer GPT-5.2, which is less prone to reasoning errors and hallucinations. Users who built workflows around older models like o3 and o1 may experience frustration as OpenAI consolidates its offerings.

Despite their advanced conversational and reasoning capabilities, OpenAI's flagship GPT models remain proprietary. OpenAI keeps their training data and parameters confidential, and full access often requires a commercial license or subscription. We recommend these models for businesses seeking an LLM that excels in multi-step reasoning, conversational dialogue, and real-time interactions, particularly those with a flexible budget.

DeepSeek, a Chinese AI company, has continued to push the boundaries of AI innovation with a focus on both specialized and versatile models. Since late 2024, DeepSeek has been actively releasing and updating its models, including DeepSeek-V3.2-Exp and the DeepSeek-R1 series.

DeepSeek-V3.2-Exp introduces 'Fine-Grained Sparse Attention,' which DeepSeek describes as a first-of-its-kind architecture that improves computational efficiency by 50%. For enterprises focused on ROI, DeepSeek offers aggressive pricing, with input costs as low as $0.07 per million tokens (on cache hits). DeepSeek also describes the V3.2 line as its first to integrate 'thinking' directly into tool use, bridging the gap between reasoning and action.
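
The general idea behind sparse attention is that each query attends to only a small subset of keys instead of all of them. The Python sketch below shows a generic top-k variant for a single query; it illustrates the concept under our own simplifying assumptions and is not DeepSeek's implementation. In particular, it still computes a full dot-product score for every key to choose the subset, whereas a practical design needs a selection step that is much cheaper than full attention. The function name `topk_sparse_attention` is hypothetical.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    # Numerically stable softmax.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def topk_sparse_attention(query, keys, values, k):
    """Attend from one query to only the k highest-scoring keys."""
    d = len(query)
    # Scaled dot-product score for every key (the selection step).
    scores = [sum(q * x for q, x in zip(query, key)) / math.sqrt(d) for key in keys]
    # Indices of the k best-scoring keys; every other key is ignored.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores only.
    weights = softmax([scores[i] for i in top])
    # Weighted sum of the selected value vectors.
    out = [0.0] * len(values[0])
    for w, i in zip(weights, top):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, top


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0], [40.0]]
out, selected = topk_sparse_attention(query, keys, values, k=2)
print(selected)  # [0, 2]
print(out)
```

With `k=2`, only keys 0 and 2 contribute to the output, so the attention-weighted sum (and, in a real model, the memory traffic for values) scales with `k` rather than with the full sequence length.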

For advanced reasoning, DeepSeek introduced the R1 series, which includes R1-Zero and R1. The R1 series is designed for high-level problem-solving in areas such as financial analysis, complex mathematics, and automated theorem proving.

DeepSeek also released DeepSeek-Prover-V2, an open-source model tailored for formal theorem proving in Lean 4. To make these capabilities more accessible, DeepSeek has also developed the DeepSeek-R1-Distill series: smaller, more efficient models "distilled" from the larger R1 model. These distilled models, based on architectures like Qwen and Llama, are well suited to production environments where computational efficiency is a priority.

Alibaba has been actively advancing its language model lineup, with the latest major releases centered on the Qwen3 series. The flagship Qwen3 models use a Mixture-of-Experts (MoE) architecture and reportedly meet or beat GPT-4o and DeepSeek-V3 on most public benchmarks while using far less compute.
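
Mixture-of-Experts is why total and active parameter counts differ: a small router picks a few experts per input, so only a fraction of the weights run for any given token. The sketch below shows a common top-k gating scheme in plain Python. It is a generic illustration with toy experts, not Alibaba's implementation, and architecture details such as expert counts and load-balancing objectives are not modeled; `moe_forward` is a hypothetical name.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    # Numerically stable softmax.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route input x to the top-k experts and mix their outputs."""
    # Router: one logit per expert, the dot product of x with that expert's gate vector.
    logits = [sum(xi * wi for xi, wi in zip(x, w)) for w in gate_weights]
    # Select the k experts with the highest router logits.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate over the selected experts only.
    gates = softmax([logits[i] for i in top])
    # Only the selected experts are evaluated; the others cost no compute for this input.
    # (Assumes each expert returns a vector the same length as x.)
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)
        for j in range(len(out)):
            out[j] += g * y[j]
    return out, top


# Four toy "experts" that just scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
out, used = moe_forward([0.5, 1.0], gate_weights, experts, k=2)
print(used)  # [2, 1]
```

Here only two of the four experts run. In a large MoE model the same principle lets the total parameter count grow far beyond the number of parameters actually used per token.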

Alibaba’s Qwen3-Next and the massive Qwen2.5-Max have pushed Qwen's capabilities further, although Qwen2.5-Max is offered through Alibaba's API rather than as open weights. With its largest models exceeding 1 trillion parameters via an MoE architecture, Qwen now supports 119 languages and dialects and achieves 92.3% accuracy on AIME25. It is particularly strong in real-world coding, scoring 74.1% on LiveCodeBench v6. Alibaba reports that these models now outperform GPT-4o and Llama-3.1-405B on key benchmarks, making them a heavy hitter for global, multilingual deployments.

The models in the Qwen3 family, spanning from 0.6 billion to 235 billion parameters, are open-sourced under the Apache 2.0 license and available through multiple platforms, including Alibaba Cloud API, Hugging Face, and ModelScope. The Qwen3 series also includes traditional dense models like Qwen3-32B and Qwen3-4B, which are highly flexible and can be deployed in various settings. For specialized tasks, there are models like Qwen3-Coder for software engineering, Qwen-VL for vision-language applications, and Qwen-Audio for audio processing.

For businesses and developers, the Qwen family has gained significant traction, with adoption by over 90,000 enterprises across consumer electronics, gaming, and other sectors.

Grok is the generative AI chatbot from xAI, integrated with the social media platform X to offer real-time information and a witty conversational experience. The Grok family of models is designed as a tiered lineup, with each model optimized for a different purpose.

Released in November 2025, Grok 4.1 has taken the lead in pure reasoning. It currently holds the #1 position on the LMArena leaderboard (1483 Elo) and on EQ-Bench. Most notably for business use, the hallucination rate has dropped from ~12% in Grok 4 to just over 4% in Grok 4.1, a reduction of about 65%. In blind A/B tests, users preferred Grok 4.1 nearly 65% of the time over the previous production model.
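
The 65% figure is a relative reduction, not a drop of 65 percentage points. Taking 4.2% as an assumed value for "just over 4%," the calculation works out as follows; in absolute terms, the hallucination rate falls by about 7.8 percentage points.

$$
\text{relative reduction} = \frac{r_{\text{Grok 4}} - r_{\text{Grok 4.1}}}{r_{\text{Grok 4}}} \approx \frac{12\% - 4.2\%}{12\%} = \frac{7.8}{12} = 0.65
$$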

For developers, Grok Code Fast 1 is a specialized, cost-effective model built for "agentic coding," excelling at automating software development workflows, debugging, and generating code.

These recent models build on the foundation laid by their predecessors. Grok 3 introduced advanced reasoning capabilities with a "Think" mode for step-by-step problem-solving and a "DeepSearch" function for in-depth, real-time research. Grok 2 was the first to introduce multimodality, including image understanding and text-to-image generation.

Given this diverse lineup, Grok is recommended for a range of applications. Grok 4 is ideal for heavy research, data analysis, and expert-level problem-solving. Grok Code Fast 1 is the go-to for software development where speed and cost are a priority. For a balance of speed and quality, the Grok 3 models are well-suited for advanced problem-solving, education, and real-time analysis of current events.

Meta continues to be a leader in the LLM space with its state-of-the-art Llama models, prioritizing an open-source approach. The latest major release is Llama 4, which includes natively multimodal models like Llama 4 Scout and Llama 4 Maverick. These models can process text, images, and short videos, and are built on a Mixture-of-Experts (MoE) architecture for increased efficiency.

Llama 4 Scout is notable for its industry-leading context window of up to 10 million tokens (roughly 80 novels), making it ideal for tasks requiring extensive document analysis. The Llama 3 series, including Llama 3.1 and 3.3, remains a set of powerful text-based models optimized for applications in customer service, data analysis, and content creation.

In recent benchmarking, Llama 4 Maverick reportedly scored 68.47% on standard benchmarks, placing it ahead of Llama 3.1 405B. In code compilation tasks, it successfully compiled 1,007 instances, outperforming previous iterations.
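
The "roughly 80 novels" comparison for Llama 4 Scout's context window can be reproduced with back-of-the-envelope arithmetic. The conversion factors are our assumptions, not Meta's figures: about 0.75 English words per token is a common rule of thumb for subword tokenizers, and 90,000 words is a typical novel length. Changing either one shifts the answer noticeably.

```python
def novels_in_context(context_tokens: int,
                      words_per_token: float = 0.75,
                      words_per_novel: int = 90_000) -> float:
    # Convert a token budget to words, then to an approximate number of novels.
    return context_tokens * words_per_token / words_per_novel


print(round(novels_in_context(10_000_000)))  # 83
```

The same helper gives a quick sanity check for smaller windows, for example a 200K-token context holds under two novels by this estimate.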

Unlike closed-source models such as those from OpenAI and Google, Llama’s open-source nature offers developers greater flexibility and control. This allows for fine-tuning the models to specific needs and deploying them on private infrastructure, appealing to businesses seeking scalability and greater security. In terms of performance, Llama 4 Maverick and Scout have been reported to outperform competitors like GPT-4o and Gemini 2.0 Flash across various benchmarks, especially in coding, reasoning, and multilingual capabilities. The open availability and competitive performance of these models foster a large community of researchers and developers.

Anthropic’s latest flagship models, the Claude 4 family (including Opus 4, Sonnet 4.5, and Opus 4.5), build on the foundation of the Claude 3 series by integrating multiple reasoning approaches. A standout feature is “extended thinking mode,” which uses deliberate, self-reflective reasoning: the model iteratively refines its thought process, evaluates different reasoning paths, and checks for accuracy before finalizing an output, making it suitable for complex, multi-step problem-solving.
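
A minimal sketch of how extended thinking is switched on through Anthropic's Messages API, assuming the `thinking` parameter shape given in Anthropic's documentation (`{"type": "enabled", "budget_tokens": N}`). The function only builds the JSON request body; sending it with the official SDK or an authenticated HTTP request is left out, and the helper name `build_thinking_request` and the `MODEL_ID` placeholder are hypothetical. The budget sets how many tokens Claude may spend reasoning before it writes the visible answer.

```python
import json


def build_thinking_request(prompt: str, model: str,
                           budget_tokens: int = 4096,
                           max_tokens: int = 8192) -> dict:
    """Build a Messages API request body with extended thinking enabled."""
    # Anthropic's docs describe a minimum thinking budget of 1,024 tokens.
    if budget_tokens < 1024:
        raise ValueError("budget_tokens should be at least 1024")
    # The thinking budget has to leave room for the final answer within max_tokens.
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


# "MODEL_ID" is a placeholder; substitute a current Claude 4 model identifier.
body = build_thinking_request("Outline a three-step plan to migrate a database.", model="MODEL_ID")
print(json.dumps(body, indent=2))
```

A larger budget generally buys more deliberate reasoning at the cost of latency and token spend, so it is worth tuning per task rather than fixing it globally.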

Claude models are designed as a versatile family, with each model balancing intelligence, speed, and cost. The Opus models are the most powerful, excelling at complex, long-running tasks and agent workflows, with particular strengths in coding and advanced reasoning. Claude Sonnet 4.5, released in September 2025, is positioned as the best model for real-world agents and coding; Anthropic reports that it can autonomously sustain complex, multi-step tasks for over 30 hours. It is also an all-around performer optimized for enterprise workloads like data processing.

The 4.5 family also includes Claude Haiku 4.5 (released October 15, 2025). In computer use, Claude Sonnet 4.5 currently leads the field, scoring 61.4% on OSWorld, where previous bests were around 45%.

On the coding front, Claude Sonnet 4.5 scored 77.2% on SWE-bench Verified and reportedly maintained context over debugging sessions lasting six hours or more with an 89% success rate. For enterprises, Opus 4.5 offers higher performance while using 48% fewer tokens than previous high-effort models.

Like the Claude 3 models, the Claude 4 models offer a 200K-token context window (with a 1-million-token context window in beta on Sonnet 4), allowing them to process lengthy documents. The models are multimodal, capable of processing both text and images, and support "computer use," which allows them to navigate a computer's screen with enhanced proficiency. Overall, the Claude family is a strong competitor to models like Google's Gemini and OpenAI's GPT series, consistently performing well on benchmarks for coding and reasoning.

Mistral AI, a prominent player in the LLM landscape, offers a diverse portfolio of models for both the open-source community and enterprise clients. A key differentiator is its specialized and flexible model approach, providing options tailored for specific use cases.

Mistral released the Mistral 3 family, including Mistral Large 3, an MoE model with 675B total parameters. It reportedly delivers 92% of GPT-5.2’s performance at roughly 15% of the price, a strong value proposition for cost-conscious scaling. Mistral also updated its edge lineup with Ministral 3, which can run on a single GPU for applications such as drones and robotics. Its OCR 3 model reportedly achieves a 74% win rate on complex document parsing, which is crucial for administrative automation.
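
Taken at face value, the "92% of the performance at roughly 15% of the price" claim translates into about six times the performance per dollar. The sketch below makes that arithmetic explicit; it assumes a single aggregate performance score and uniform per-token pricing, which hides differences across tasks and workloads. The helper name `relative_value` is hypothetical.

```python
def relative_value(perf_ratio: float, price_ratio: float) -> float:
    # Performance per unit of cost, relative to the reference model (1.0 = parity).
    return perf_ratio / price_ratio


# Reported: 92% of GPT-5.2's performance at roughly 15% of its price.
print(f"{relative_value(0.92, 0.15):.1f}x")  # 6.1x
```

A ratio like this is only meaningful if the remaining 8% performance gap does not matter for your particular use case.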

For developers, there's Devstral Medium, an "agentic coding" model, and Codestral 2508, optimized for low-latency coding tasks in over 80 programming languages. Mistral also provides smaller "edge" models like Ministral 3B and 8B for resource-constrained devices, and Voxtral, a family of audio models for speech-to-text.

On the open-source side, Mistral's models are released under the Apache 2.0 license. Mixtral 8x22B is a powerful open-source model using a Mixture-of-Experts (MoE) architecture, known for its performance and computational efficiency. Other open models include Devstral Small 1.1 for coding, Pixtral 12B for multimodal tasks, and Mathstral 7B for solving mathematical problems.

Google’s Gemini 3 Pro and Gemini 3 Flash show substantial improvements over earlier Gemini models. Gemini 3 Pro features a 1M-token context window and scored 100% on AIME 2025 (with code execution).

The 'Deep Think' capabilities in Gemini 3 have improved reasoning scores by a factor of 2.5 compared to previous generations (ARC-AGI-2 score of 45.1%). For developers, Gemini 3 Flash is a standout, achieving 78% on SWE-bench Verified and outperforming even the Pro version on specific coding tasks.
